Load Testing: Think Time, Pacing, and Delays

What is Think Time in Performance Testing?

Think time in load testing refers to the simulated delay or pause between consecutive user actions during a performance test. It represents the time that a user spends thinking, reading, or otherwise being inactive after completing one action and before initiating the next one. Think time is introduced into the test scenarios to make them more realistic and to mimic the natural behavior of actual users interacting with the application.

For example, consider an e-commerce web app scenario where a user selects a product tile. Next, they navigate to the product display page and take some time to consume and read the content on that page before eventually clicking on the “Add to Cart” button. The duration elapsed between clicking on the product tile and clicking on “Add to Cart” is referred to as think time.
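The scenario above can be sketched in a few lines of Python. This is a minimal illustration rather than a LoadView script: the `run_journey` helper, the step names, and the think time ranges are all hypothetical, and the `lambda: None` actions stand in for real requests.

```python
import random
import time
from typing import Callable, List, Optional, Tuple

# (step name, action, (min, max) think time in seconds applied after the action)
Step = Tuple[str, Callable[[], None], Tuple[float, float]]


def run_journey(
    steps: List[Step],
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> List[Tuple[str, float]]:
    """Run each step, then pause for a random think time within its range.

    Returns the think time applied after each step so it can be logged.
    """
    if rng is None:
        rng = random.Random()
    applied = []
    for name, action, (low, high) in steps:
        action()                        # e.g. send the request for this step
        pause = rng.uniform(low, high)  # think time varies per user and per visit
        sleep(pause)
        applied.append((name, pause))
    return applied


# Hypothetical journey: the user reads the product page for 5 to 15 seconds
# before clicking "Add to Cart". The ranges are assumptions for illustration.
journey: List[Step] = [
    ("open product page", lambda: None, (5.0, 15.0)),
    ("add to cart", lambda: None, (0.0, 0.0)),
]
```

Calling `run_journey(journey)` would pause for real. Passing a different `sleep` function, such as a list's `append` method, records the pauses without waiting, which is handy for a dry run.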

When people think of load testing, they often picture large numbers of users bombarding a site or API all at once. While that’s part of the story, think time is just as important. It helps simulate actual user journeys like browsing for products, logging into accounts, or completing purchases. Each action has its own natural pause, and factoring these into your tests ensures your application is prepared for real-world user behavior.

Advantages of Think Time

- Realism: Including think time replicates the natural behavior of users who don’t interact with a system continuously. This enhances the realism of load testing scenarios, making them more reflective of actual user experiences.

- User Simulation Accuracy: Think time helps in accurately simulating user behavior during the testing process. Users typically spend time reading content, making decisions, or contemplating actions, and think time allows for the simulation of these natural pauses.

- Performance: Think time frees resources on the Execution Server machine between requests. This lets other Virtual Users (VUs) on the same Execution Server send their requests and makes the machine less likely to run into I/O constraints.

- Improved Troubleshooting: Realistic think time scenarios assist in detecting performance bottlenecks that may not be apparent in scenarios without pauses. It helps uncover issues related to user sessions, session management, and overall system responsiveness.

When to Use Think Times

Incorporating think times in your load testing can be difficult because every user is different. On the bright side, think time does not have to be a single fixed value: you can use a range of values instead. For example, some users may take longer to read or enter data into a form than others.

You’ll want to add think time between these actions to replicate, as accurately as possible, the time between a person receiving a response from your server and that person requesting a new page. Your think time will depend on your user scenarios, and you should determine your think time value ranges using data from your website or application, such as the median amount of time users spend on each page.
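One way to turn time-on-page data into a think time range is sketched below. The `think_time_range` helper, the 50% spread around the median, and the sample values are assumptions made for illustration; real values should come from your own analytics.

```python
import random
import statistics
from typing import Sequence, Tuple


def think_time_range(
    dwell_seconds: Sequence[float], spread: float = 0.5
) -> Tuple[float, float]:
    """Return a (min, max) think time range centred on the median dwell time.

    spread=0.5 means the range runs from 50% to 150% of the median.
    """
    median = statistics.median(dwell_seconds)
    return median * (1 - spread), median * (1 + spread)


# Made-up time-on-page samples (in seconds) for a single page.
samples = [4.0, 6.5, 8.0, 9.0, 12.0, 15.5, 40.0]

low, high = think_time_range(samples)   # median is 9.0, so the range is 4.5 to 13.5
think_time = random.uniform(low, high)  # draw one think time for one simulated visit
```

The median is used rather than the mean so that a few very long visits, like the 40-second sample here, do not stretch the range.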

What is Pacing in Performance Testing?

Pacing is used in load tests to ensure that the test runs at the intended transactions per second (TPS) rate. It is the time gap between consecutive full iterations of the business flow for a virtual user, whereas think time is the gap between individual actions within an iteration. Pacing helps to regulate the number of requests sent to the server per second.
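A minimal pacing sketch follows. It assumes pacing is measured from the start of one iteration to the start of the next; some tools measure it from the end of an iteration instead. The `pacing_wait` and `run_paced` names are hypothetical and not part of any specific tool.

```python
import time
from typing import Callable


def pacing_wait(pacing_interval: float, iteration_elapsed: float) -> float:
    """Seconds to wait so that a new iteration starts every pacing_interval.

    If the iteration took longer than the interval, there is nothing to wait for.
    """
    return max(0.0, pacing_interval - iteration_elapsed)


def run_paced(
    iteration: Callable[[], None],
    iterations: int,
    pacing_interval: float,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Run the business flow repeatedly at a fixed start-to-start interval."""
    for _ in range(iterations):
        started = clock()
        iteration()  # the full business flow, including its think times
        sleep(pacing_wait(pacing_interval, clock() - started))
```

Under this assumption, each virtual user starts one iteration per pacing interval as long as the iteration finishes within the interval, so 10 users with a 5-second pacing interval would produce about 2 iterations per second.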

The Importance of Introducing Delays in Load Testing

Testing the application’s performance before its widespread release helps prevent potential inconveniences for end users, like timeouts, slow page responses, and downtime. To ensure realistic test results and uncover any issues, incorporating think time and pacing into our test scenario design is essential.

For example, when we introduce think time between each user action, the server can use that gap to work through pending requests from other users in its queue instead of receiving back-to-back requests from the same user. This closely mirrors the typical production scenario with real users. Additionally, incorporating think time extends the time each user’s session stays open on the application, revealing any issues related to the server’s capacity to handle concurrent users effectively.

Calculating Delays for Testing Applications

The number of concurrent virtual users, the delays, and the transactions per second (TPS) vary for each application. To calculate the delays and user counts for your application, you can use the formula below, which assumes that each virtual user completes one transaction, waits for the delay, and then starts the next transaction:

- Total Transactions = Concurrent Users Count * Load test duration (in seconds) / (Response Time + Delays)

For example, let’s consider generating 100,000 transactions with a response time of 5 seconds each during a 10-minute test (600 seconds). With a 3-second think time, each user completes one transaction every 8 seconds, or 600 / 8 = 75 transactions over the whole test. The required number of concurrent users is therefore 100,000 * 8 / 600, resulting in approximately 1,334 users. This approach helps identify the necessary delays and user counts for effective load tests.
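As a quick check on this kind of estimate, the sketch below computes the user count under the assumption that each virtual user completes one transaction every (response time + think time) seconds for the whole test, with no ramp-up and no errors. The `required_users` function is a hypothetical helper, not a LoadView feature.

```python
import math


def required_users(
    total_transactions: int,
    duration_s: float,
    response_time_s: float,
    think_time_s: float,
) -> int:
    """Concurrent users needed to reach total_transactions within duration_s."""
    # Transactions one user can complete during the test.
    per_user = duration_s / (response_time_s + think_time_s)
    # Round up, because a fraction of a user cannot be scheduled.
    return math.ceil(total_transactions / per_user)


# 100,000 transactions in 600 seconds, 5 s response time, 3 s think time.
users = required_users(100_000, 600, 5, 3)
print(users)  # → 1334
```

Under these assumptions each user completes 600 / 8 = 75 transactions, so about 1,334 users are needed, which corresponds to roughly 167 transactions per second overall.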

Setting Up Load Testing Delays with LoadView

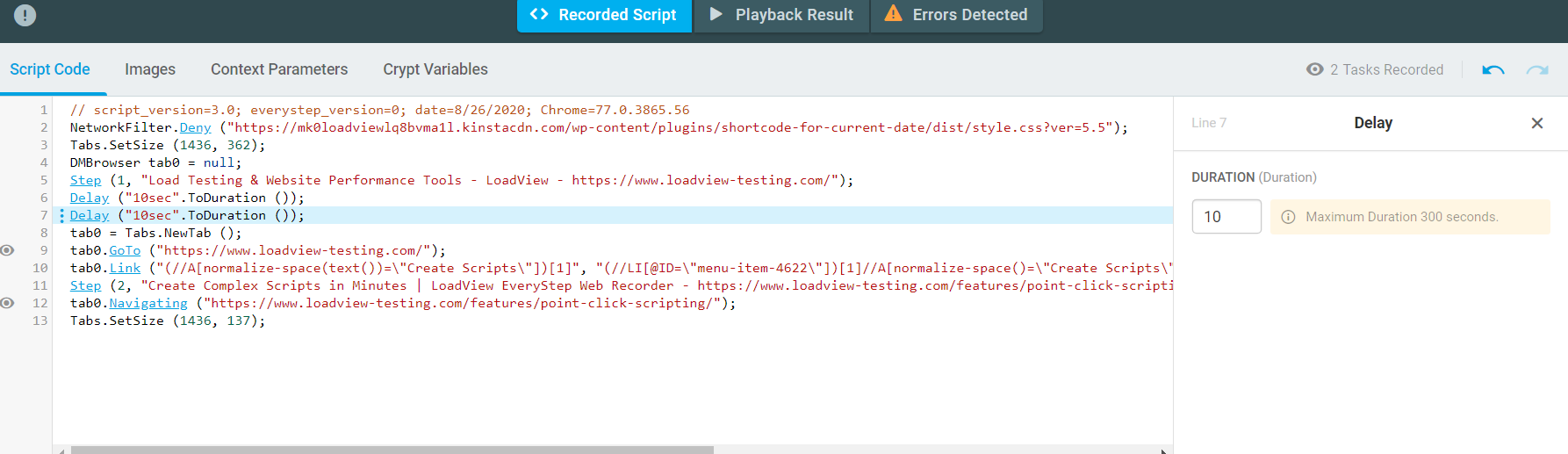

LoadView provides the EveryStep Web Recorder, which streamlines the creation of test scenarios by recording user actions in a browser. It faithfully replicates the user’s steps, capturing data points such as selectors, actions, and delays. When you create your test scenario, it’s essential to emulate your authentic user journey, including think time delays. After recording, the tool generates a script that can be rerun with the specified number of concurrent users. The script is customizable, so you can modify and update the delays for individual steps as required for testing, as demonstrated in the image below. Learn more about how to edit EveryStep Web Recorder scripts.

The optimal approach to achieve precise results in a load test involves creating a script that simulates real user interactions with the application and captures the user journey.

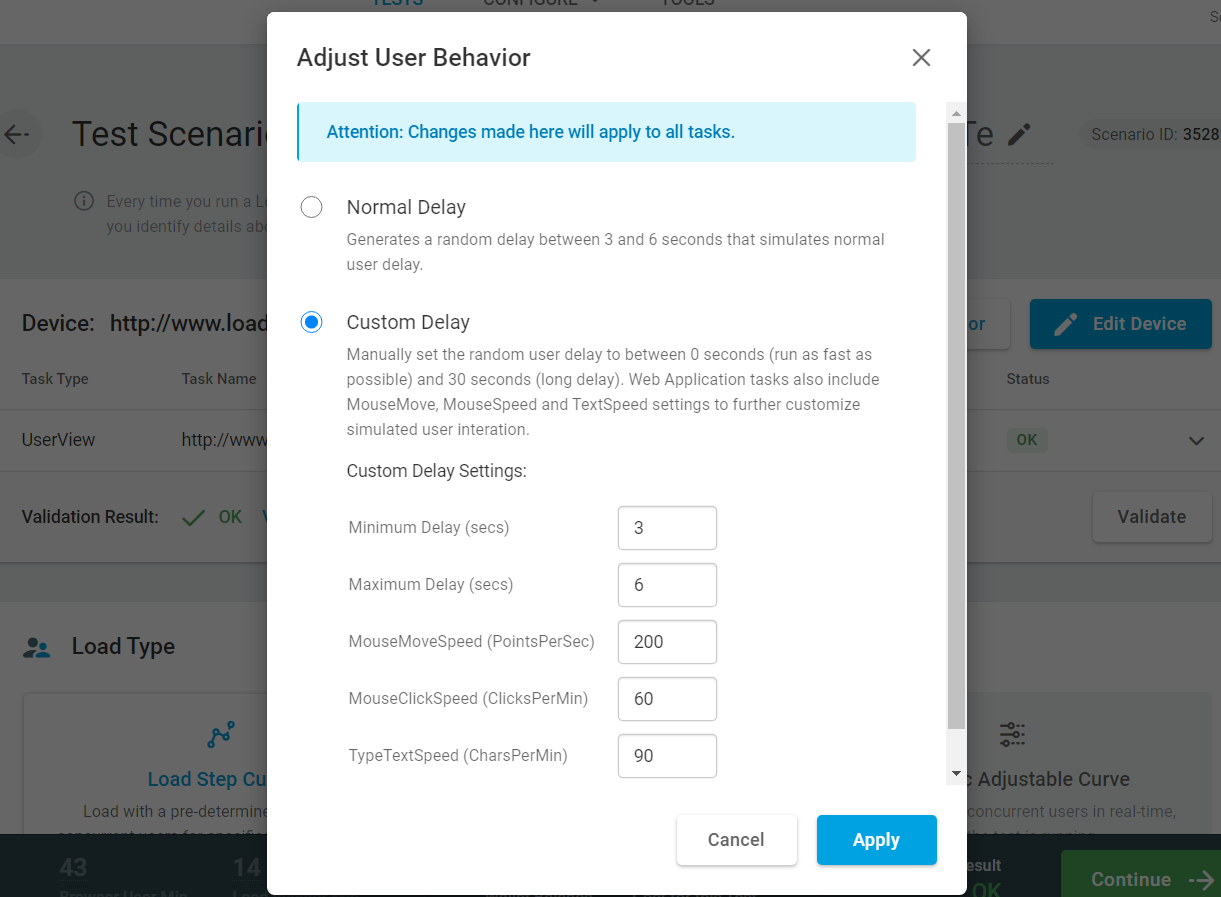

With LoadView, you’ll also have the option to modify the user behavior when load testing. As you can see in the image below, you can choose Normal Delay or Custom Delay to set specific user behavior and delays for your applications.

Conclusion: Think Time, Pacing, and Delays

Conducting performance testing on your application is crucial before its deployment into production. How accurately this process identifies performance-related issues depends on adhering to best practices and developing test scenarios that encompass real user actions within your application.

This article explored the significance of think time, pacing, and delays in load testing. By incorporating these elements into your load test designs, you can detect issues such as page timeouts, slow page responses, response time discrepancies, and server errors well in advance, even under high loads. Adopting these strategies contributes to the development of responsive and reliable applications and websites. LoadView can help streamline this process by making it easy to incorporate think time, pacing, and delays into your load test scenarios. When you sign up for LoadView, you’ll get all the load testing benefits that the platform has to offer, and you can run your first tests for free.
