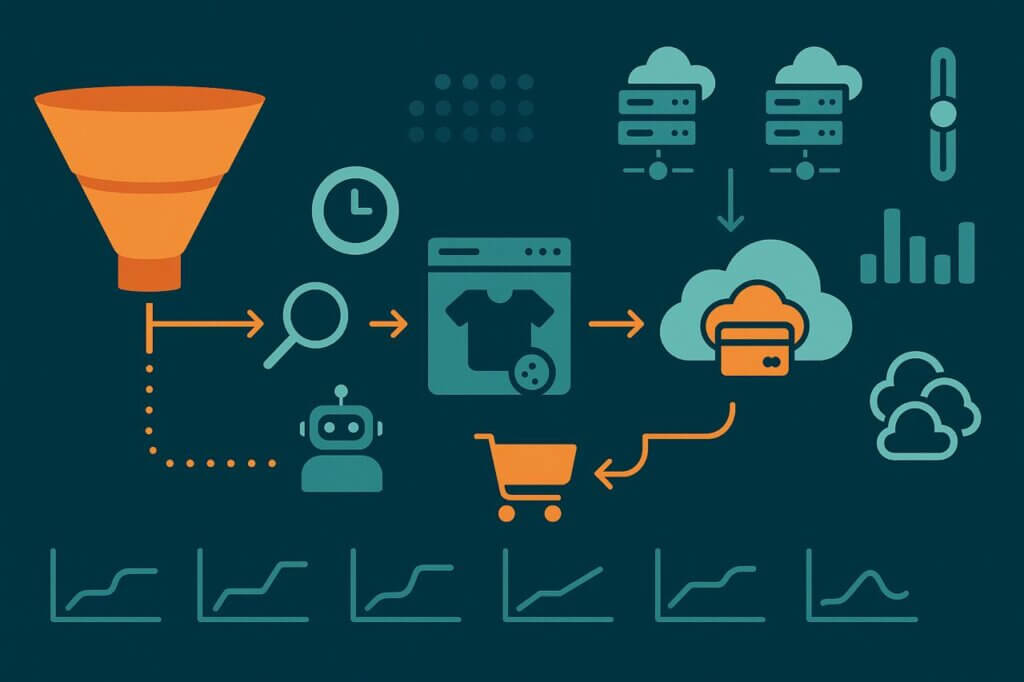

Ecommerce sites don’t behave like ordinary websites. They don’t just deliver content; they facilitate intent. A shopper isn’t reading a blog post or browsing a static catalog. They are searching, filtering, comparing, adding to cart, and sometimes purchasing. Each of these steps generates distinct traffic patterns, and together they shape the real load your backend must survive. If you simply point a load testing tool at checkout and hit “start,” you miss 90% of what users are really doing. Worse, you may test (and then optimize) the wrong systems, leaving real bottlenecks untouched.

This article walks through how to build ecommerce-specific load tests. We’ll cover the unique characteristics of ecommerce traffic, practical ways to model flows like browsing and purchasing in the right proportions, common mistakes that undermine realism, and best practices that elevate your tests from generic stress checks to business-relevant insights. Finally, we’ll touch on how to carry these same scenarios into monitoring for continuous assurance.

What Makes Ecommerce Traffic Unique

The first step is understanding how ecommerce differs from other web traffic:

- Burstiness around events. Ecommerce traffic isn’t steady-state. Black Friday, flash sales, and influencer-driven campaigns produce sharp peaks, sometimes 10x or 50x baseline load within minutes. Generic test ramps don’t capture this volatility.

- Browsing vs. buying mix. Most visitors never buy. Industry averages put conversion rates between 2% and 5%. That means 95%+ of sessions are browsing-heavy, hitting product list pages, search endpoints, and recommendation APIs.

- Inventory-driven flows. Traffic behavior shifts with stock levels. When an item goes out of stock, some users drop off while others browse alternatives, so checkout traffic is never constant.

- Multi-step funnels. Unlike content sites where a pageview is the event, ecommerce sessions span multiple requests across login, search, product detail, cart, and checkout. Each step stresses different systems.

- Third-party dependencies. Modern ecommerce stacks are federated systems. Payment gateways, fraud checks, tax/shipping APIs, and recommendation engines all add latency and risk. A realistic test must hit these external calls, not just your internal endpoints.

Together, these make ecommerce one of the hardest categories to test realistically. The diversity of behavior is the point.

Key Ecommerce Traffic Patterns to Model

When creating load test scenarios, think beyond “all users check out,” because most users don’t. Instead, capture the full spectrum of ecommerce user behaviors, including:

Browsing-Heavy Traffic

These are the majority of sessions—users arriving from search engines, ads, or social media. They may view category pages, filter results, and click into product detail pages. In aggregate, this is the heaviest load on your content delivery, caching, and catalog APIs. Browsing traffic stresses read-heavy parts of the stack and reveals where CDNs, caching layers, or slow database queries can bottleneck.

Searchers

Search-heavy sessions deserve special attention in load testing. Unlike static category pages, search results often bypass caching and execute CPU-heavy queries against product databases. For retailers with large catalogs, search endpoints are among the highest-risk systems under load. A test that doesn’t emulate heavy search traffic risks missing your biggest choke point.

Cart Abandonment

Studies show over 60% of online shopping carts are abandoned. Simulating this traffic matters because it stresses cart persistence, session storage, and database writes, even though the user never completes checkout. If your load test models only successful purchases, you overlook a major category of real traffic.

Buyers

Buyers are the minority but the most business-critical. Their flow touches checkout, payment integrations, shipping calculators, tax APIs, and fraud detection. Load testing this flow validates revenue-critical infrastructure. Even at 2–5% of traffic, failures here directly translate into lost sales.

Bot-Like Surges

Flash sales, sneaker drops, and limited-edition releases often create traffic patterns that resemble bot attacks: thousands of users (or bots) hammering checkout in a very short window. These surges produce distinctive contention in cart services, inventory management, and payment gateways. Modeling them is essential if you ever run time-limited promotions.

Together, these patterns form the backbone of realistic ecommerce traffic simulation.

Approaches to Simulating Ecommerce Traffic

Random Scripting Pitfalls

Load tests often randomize page hits without constraint. The result is chaos: 50% of sessions might “teleport” straight to checkout, or the same item ID may be requested 10,000 times in a row. Randomness alone is not realism—it creates noise and hides bottlenecks.

Controlled Proportions

A better approach is to weight flows. For example: 70% browse-only, 20% cart, 8% checkout drop-offs, 2% purchase. These ratios should come from your analytics data, not guesses. Google Analytics, Clicky, or server logs provide the baseline. Once you define the mix, configure your load testing tool to assign flows with those weights. This ensures browsing remains the dominant load driver while checkout is tested proportionally.
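A minimal Python sketch of weighted flow assignment, assuming you script virtual users yourself rather than relying on a tool's built-in scenario weights. The flow names and the 70/20/8/2 split are placeholders taken from the example above; `assign_flows` is a hypothetical helper, not part of any tool's API.

```python
from __future__ import annotations

import random
from collections import Counter

# Hypothetical mix copied from the example ratios; replace with numbers from your analytics.
FLOW_WEIGHTS = {
    "browse_only": 70,
    "cart": 20,
    "checkout_dropoff": 8,
    "purchase": 2,
}


def assign_flows(n_users: int, weights: dict[str, float], seed: int | None = None) -> list[str]:
    """Return one flow name per virtual user, drawn in proportion to `weights`."""
    rng = random.Random(seed)  # seeded so a test run can be reproduced
    names = list(weights)
    return rng.choices(names, weights=[weights[name] for name in names], k=n_users)


if __name__ == "__main__":
    flows = assign_flows(10_000, FLOW_WEIGHTS, seed=42)
    counts = Counter(flows)
    for name in FLOW_WEIGHTS:
        print(f"{name:<16} {counts[name] / len(flows):.1%}")
```

Because each user is drawn independently, the proportions hold on average but drift in small runs (with 50 users you may get no purchases at all). If you need exact counts per flow, allocate them deterministically instead of sampling.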

Session State Modeling

Users don’t reset every click. A realistic script maintains state: the same session searches, views, adds, and maybe purchases. Carrying cookies, cart contents, and authentication tokens produces load that stresses the right subsystems. Some tools support this natively; others require scripting logic.
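To make session state concrete, here is a minimal sketch in which one object carries cookies, an auth token, and cart contents through a search, product view, and add-to-cart journey. The `Transport` callable, the `Response` type, and the endpoint paths are hypothetical stand-ins; in a real script the transport would wrap your HTTP client or your load testing tool's request function.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Response:
    status: int
    set_cookies: dict[str, str] = field(default_factory=dict)


@dataclass
class ShopperSession:
    """Everything one virtual user carries from request to request."""
    cookies: dict[str, str] = field(default_factory=dict)
    auth_token: str | None = None
    cart: list[str] = field(default_factory=list)


# Hypothetical transport: (method, path, headers) -> Response.
# In a real script this would wrap your HTTP client or load testing tool.
Transport = Callable[[str, str, Dict[str, str]], Response]


def send(session: ShopperSession, transport: Transport, method: str, path: str) -> Response:
    """Send one request with the session's cookies and token, then store any new cookies."""
    headers = {"Cookie": "; ".join(f"{k}={v}" for k, v in session.cookies.items())}
    if session.auth_token:
        headers["Authorization"] = f"Bearer {session.auth_token}"
    response = transport(method, path, headers)
    session.cookies.update(response.set_cookies)  # later steps reuse server-issued cookies
    return response


def search_view_add(session: ShopperSession, transport: Transport, product_id: str) -> None:
    """One stateful journey: search, open a product page, add it to the cart."""
    send(session, transport, "GET", "/search?q=shoes")  # hypothetical endpoints
    send(session, transport, "GET", f"/products/{product_id}")
    if send(session, transport, "POST", f"/cart/{product_id}").status == 200:
        session.cart.append(product_id)
```

Keeping the transport pluggable lets you check the journey logic against a fake server before pointing it at a real environment.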

Inventory Scenarios

Inventory adds complexity. When products go out of stock, behavior shifts: users refresh, try alternatives, or abandon carts. Simulating this requires scripting conditional flows: if “add to cart” fails, retry or redirect. These scenarios mirror the frustration loops of real users during high demand.
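The conditional logic described above (retry, switch to an alternative, or abandon) might look like this in a scripted user. `add_to_cart` is a hypothetical callable that returns `True` on success; the retry count and the 30% abandonment probability are illustrative defaults, not measured values.

```python
from __future__ import annotations

import random
from typing import Callable


def add_with_fallback(
    add_to_cart: Callable[[str], bool],
    product_id: str,
    alternatives: list[str],
    max_retries: int = 2,
    abandon_probability: float = 0.3,
    rng: random.Random | None = None,
) -> tuple[str, str | None]:
    """Try to add a product; on failure retry, then maybe abandon, else try alternatives.

    Returns (outcome, sku) where outcome is "added" or "abandoned".
    """
    if rng is None:
        rng = random.Random()
    # Frustrated users retry the item they wanted a few times (the refresh loop).
    # A real script would also insert a think time between these attempts.
    for _ in range(1 + max_retries):
        if add_to_cart(product_id):
            return "added", product_id
    # Some users give up once the item is clearly out of stock.
    if rng.random() < abandon_probability:
        return "abandoned", None
    # The rest try alternatives in order until one is in stock.
    for alt in alternatives:
        if add_to_cart(alt):
            return "added", alt
    return "abandoned", None
```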

Think Times

Real people pause. A think time of 3–7 seconds between actions separates human-like load from robotic floods, and randomizing it within that range avoids artificial uniformity. Without think times, each virtual user fires requests back to back, so throughput looks inflated and unrealistic.
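A sketch of randomized think time, plus the arithmetic behind "throughput looks inflated." For a closed pool of N users, the interactive response time law gives throughput X = N / (R + Z), where R is response time and Z is mean think time. The function names and the 1,000-user, 0.5-second example are illustrative assumptions; `sleep` is injectable so the function can be exercised without actually waiting.

```python
from __future__ import annotations

import random
import time
from typing import Callable


def think(min_s: float = 3.0, max_s: float = 7.0,
          rng: random.Random | None = None,
          sleep: Callable[[float], None] = time.sleep) -> float:
    """Pause for a random, human-like interval between actions; return the delay used."""
    rng = rng if rng is not None else random.Random()
    delay = rng.uniform(min_s, max_s)  # uniform between min_s and max_s
    sleep(delay)
    return delay


def expected_throughput(users: int, response_time_s: float, think_time_s: float) -> float:
    """Requests per second from a closed pool of users: X = N / (R + Z)."""
    return users / (response_time_s + think_time_s)


if __name__ == "__main__":
    # 1,000 users with 0.5 s responses, with and without a 5 s average think time.
    print(round(expected_throughput(1000, 0.5, 5.0)))  # 182
    print(round(expected_throughput(1000, 0.5, 0.0)))  # 2000
```

With 3–7 seconds of uniform think time (5 seconds on average), 1,000 virtual users generate roughly 182 requests per second against 0.5-second responses; remove think time and the same users generate 2,000, an elevenfold overstatement.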

Location and Device Distribution

Simulate where and how users connect. A mix of 70% mobile Safari users in the US and 30% desktop Chrome users in Europe loads your stack very differently from a single-region, single-device test. Load tests that ignore this distribution miss CDN latency issues, mobile-specific performance problems, and regional gateway bottlenecks. LoadView makes it easy to run tests from multiple locations around the world.
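When the location and device mix is fixed for a test run, you can compute how many virtual users each profile gets instead of sampling them. This sketch uses the largest-remainder method so per-profile counts always add up to the total. The profile labels are hypothetical; in LoadView or any other tool you would still choose the actual locations and browsers in that tool's own configuration.

```python
from __future__ import annotations

import math

# Hypothetical profile mix echoing the 70/30 example; real shares come from analytics.
PROFILE_SHARES = {
    "us/mobile-safari": 70,
    "eu/desktop-chrome": 30,
}


def allocate_users(total: int, shares: dict[str, float]) -> dict[str, int]:
    """Split `total` virtual users across location/device profiles in proportion
    to `shares`, using the largest-remainder method so the counts add up exactly."""
    weight_sum = sum(shares.values())
    exact = {name: total * w / weight_sum for name, w in shares.items()}
    counts = {name: math.floor(x) for name, x in exact.items()}
    leftover = total - sum(counts.values())
    # Give the remaining users to the profiles with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda name: exact[name] - counts[name], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts
```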

Best Practices for Building Load Test Scripts

Designing a load test for ecommerce isn’t just about throwing traffic at the system—it’s about shaping that traffic to resemble real users as closely as possible. A good script balances fidelity with flexibility, pulling from analytics data while also introducing enough variability to surface edge cases. The following best practices create a foundation that makes your tests both realistic and repeatable:

- Anchor in real data. Build flows from analytics, not intuition. If 80% of your traffic is mobile Safari, your test mix should reflect that.

- Model ramp-up/ramp-down. Traffic rarely appears instantly. Ramp from baseline to peak in a curve, then drop or sustain. Match historical campaigns.

- Introduce controlled randomness. Randomize which product IDs are viewed and vary think times, but keep the flow proportions constant.

- Exercise third-party dependencies. Include calls to payment gateways, tax/shipping APIs, and recommendation services. Many outages occur here.

- Monitor error codes, not just latency. A 502 from a payment API matters more than an image that loads 50 ms slower. Instrumentation should track both.
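The ramp-up/ramp-down practice can be expressed as a function from elapsed time to target concurrency. This sketch assumes a half-cosine ease, so load accelerates gradually rather than jumping linearly; the curve shape and the example numbers (100 baseline users, a 10x peak of 1,000, a 10-minute ramp, a 30-minute hold, a 5-minute ramp-down) are assumptions to replace with the shape of your own historical campaigns.

```python
import math


def target_users(t: float, baseline: int, peak: int,
                 ramp_up: float, hold: float, ramp_down: float) -> int:
    """Virtual users to run at second `t` of the test: ease from baseline up to
    peak, hold at peak, then ease back down to baseline."""
    def ease(x: float) -> float:
        # Half-cosine curve from 0 to 1, slow at both ends instead of a straight line.
        return (1 - math.cos(math.pi * x)) / 2

    if t <= 0:
        return baseline
    if t < ramp_up:
        return round(baseline + (peak - baseline) * ease(t / ramp_up))
    if t < ramp_up + hold:
        return peak
    if t < ramp_up + hold + ramp_down:
        x = (t - ramp_up - hold) / ramp_down
        return round(peak - (peak - baseline) * ease(x))
    return baseline


if __name__ == "__main__":
    # Sample the example campaign every 5 minutes.
    for t in range(0, 2701, 300):
        print(t, target_users(t, baseline=100, peak=1000, ramp_up=600, hold=1800, ramp_down=300))
```

Sampling `target_users` every few seconds yields a schedule you can feed to whatever controls concurrency in your tool; for a flash drop, shrink `ramp_up` to seconds.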

Following these principles keeps your testing aligned with how customers actually behave. Instead of synthetic traffic that only stresses one narrow path, you get a more holistic picture of performance across journeys, geographies, devices, and dependencies. That’s the difference between finding issues in the lab and catching them when your revenue is on the line.

Common Mistakes to Avoid When Simulating Ecommerce Traffic

Even well-intentioned load tests can miss the mark if they don’t reflect how ecommerce systems actually behave under pressure. Teams often fall into predictable traps that make their results look cleaner than reality and leave blind spots in critical parts of the stack. Some of the most common pitfalls include:

- Assuming everyone buys. Conversion rates are low. Modeling 100% buyers inflates checkout testing and ignores real browsing load.

- Ignoring search. Search APIs often consume the most CPU but are left out of tests.

- Overlooking caching. First-time pageviews vs. repeat hits stress caching differently. Be sure to test both.

- Skipping edge cases. Promo codes, cart errors, and multi-currency flows matter. They often fail at scale.

- Treating load testing as one-off. Ecommerce evolves weekly with promotions. Tests must be continuous, not annual.

Avoiding these mistakes is as important as following best practices. When your tests cover the messy realities such as abandonments, caching quirks, and unpredictable edge cases, you can uncover the vulnerabilities that would otherwise appear only in production. That’s where load testing shifts from being a checkbox to being a real safeguard for revenue.

Example Ecommerce Load Testing Scenarios

Holiday Sale Simulation

Traffic surges to 10x baseline, and 40% of sessions reach checkout. The test focuses on payment gateways, fraud detection, and shipping integration. Teams should also validate that marketing-driven redirects and promo-code validations don’t collapse under load.

Normal Weekday Flow

80% browse, 15% cart, 5% purchase. Load is steady, but volume is high. Stresses product search, category browsing, and recommendation APIs. Realistic weekday flows often highlight caching misconfigurations that don’t show up in checkout-only tests.

Flash Drop

Within seconds, 70% of users attempt checkout. The bottleneck is often the inventory service or cart write contention. This test surfaces how your stack behaves under concentrated, spike-like pressure: does the system serve stale inventory, reject gracefully, or collapse outright?
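Those three possible outcomes can be turned into a pass/fail check on the raw results of a flash-drop run. This sketch assumes, hypothetically, that your shop returns a 2xx status for a confirmed order, 409 for a graceful "sold out" rejection, and 5xx when something breaks; adjust the mapping to whatever your checkout API actually returns.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FlashDropVerdict:
    confirmed: int      # 2xx: order placed
    sold_out: int       # 409: graceful "sold out" rejection (assumed convention)
    server_errors: int  # 5xx: the stack is failing rather than rejecting
    other: int          # anything else, e.g. other 4xx codes
    oversold: bool      # more confirmed orders than units in stock


def classify_flash_drop(stock: int, statuses: list[int]) -> FlashDropVerdict:
    """Summarize per-user checkout status codes from a flash-drop test run."""
    confirmed = sum(1 for s in statuses if 200 <= s < 300)
    sold_out = sum(1 for s in statuses if s == 409)
    server_errors = sum(1 for s in statuses if 500 <= s < 600)
    other = len(statuses) - confirmed - sold_out - server_errors
    return FlashDropVerdict(confirmed, sold_out, server_errors, other,
                            oversold=confirmed > stock)
```

An `oversold` result points to stale inventory reads, a result dominated by 409s means the system rejected gracefully, and a large `server_errors` count means it collapsed. Cross-check confirmed orders against the order database, since a 2xx response alone doesn’t prove an order was recorded.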

Regional Sale

Simulate a campaign targeted at one geography, such as Europe-only promotions. This tests CDN edge nodes, VAT/tax APIs, and localized payment gateways. It’s common for regional gateways to be under-provisioned compared to global ones.

Bot Simulation

Add synthetic traffic that mimics scraping or automated carting behavior. This validates how your anti-bot protections interact with legitimate users during promotions. Sometimes the “fix” for bots also blocks customers.

Role of Load Testing Tools

Modern platforms like LoadView make proportional traffic scripting possible. Weighted scenarios let you declare, for example, “70% browsing, 20% cart abandoners, 10% buyers.” Session persistence, geo-distribution, and think times can be built into scripts. This transforms load testing from brute-force HTTP flooding to user-journey simulation.

These same scenarios can then be reused in synthetic monitoring (with a tool like Dotcom-Monitor). Instead of blasting checkout endpoints daily, you can run a balanced set of flows continuously at low frequency. This validates not only uptime, but the actual business workflows users depend on. A balanced approach avoids false alarms while keeping visibility sharp.
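One way to run a balanced set of flows at low frequency is to rotate through them deterministically, so each scheduled check runs one flow and every cycle covers the flows in the right proportions. This sketch uses smooth weighted round-robin; the flow names and the 7/2/1 weights (mirroring the 70/20/10 example above) are assumptions, and the actual scheduling would live in your monitoring tool, not in this function.

```python
from __future__ import annotations


def smooth_weighted_rotation(weights: dict[str, int], runs: int) -> list[str]:
    """Order `runs` monitoring checks so each flow appears in proportion to its
    weight, with heavy flows interleaved rather than bunched together
    (smooth weighted round-robin)."""
    total = sum(weights.values())
    current = {name: 0 for name in weights}
    order = []
    for _ in range(runs):
        # Every flow accumulates credit equal to its weight...
        for name, weight in weights.items():
            current[name] += weight
        # ...and the flow with the most credit runs next (first one wins ties).
        chosen = max(current, key=current.get)
        current[chosen] -= total
        order.append(chosen)
    return order


if __name__ == "__main__":
    print(smooth_weighted_rotation({"browse": 7, "cart_abandon": 2, "purchase": 1}, 10))
```

With weights 7, 2, and 1 and ten checks per cycle, the purchase flow runs once per cycle and browsing checks are spread out instead of running seven times in a row.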

Future of Ecommerce Traffic Simulation

Ecommerce complexity is accelerating. Headless commerce APIs, AI-driven personalization, and dynamic pricing change traffic patterns in real time. Tomorrow’s load tests must account for personalization engines, recommendation calls, and edge compute layers. Geo-distributed models will matter even more as sites serve audiences across continents with latency-sensitive content.

Dynamic content also means less cacheability. Personalization reduces cache hits, increasing load on origin servers. If your load tests still assume 80% cache hit rates, you’re missing the true cost of personalization. Similarly, AI-driven recommendation engines often depend on external APIs or GPU-heavy inference models—both of which behave unpredictably under scale.
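The cost of lower cacheability is easy to quantify. If the total request rate is R_total and the cache hit rate is h, only misses reach the origin. The 10,000 requests per second used here is an illustrative figure, not a benchmark:

```latex
\begin{aligned}
R_{\text{origin}} &= R_{\text{total}}\,(1 - h) \\
h = 0.8:\quad R_{\text{origin}} &= 10{,}000 \times 0.2 = 2{,}000\ \text{req/s} \\
h = 0.5:\quad R_{\text{origin}} &= 10{,}000 \times 0.5 = 5{,}000\ \text{req/s}
\end{aligned}
```

Dropping the hit rate from 80% to 50% multiplies origin traffic by (1 − 0.5) / (1 − 0.8) = 2.5, with no change in total traffic.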

The rise of mobile-first shopping adds further nuance. Load patterns now include app-specific APIs, push notifications, and deep links from external campaigns. Testing must extend beyond web flows to cover mobile app journeys.

By treating traffic simulation as an evolving discipline—not a static playbook—teams can stay ahead of these shifts.

Conclusion

Load testing ecommerce isn’t about bragging rights for fast load times under stress; it’s about realism. If you simulate traffic that doesn’t match your users, you test the wrong bottlenecks, fix the wrong problems, and risk failure when it matters most. The right approach blends browsing, searching, cart abandonment, and buying in the proportions your data shows. It incorporates geography, device mix, and third-party dependencies. And it carries those same flows into monitoring, so you know not just that your site is “up,” but that your revenue-critical journeys are actually working.

Taking the time to properly simulate ecommerce traffic is an investment in truth. When you do that, your load tests reveal the actual breaking points that matter for revenue. If you don’t, you’re left in the dark, and that can hurt your bottom line.