AI agents are changing what “load” means. Traditional load testing was built for web pages, APIs, and transactions—systems that behave predictably under stress. AI-driven workloads don’t. Their inputs vary in length, complexity, and context. Their processing is probabilistic, not deterministic. Their performance depends as much on GPU scheduling and token generation as on network latency or backend throughput.

That shift breaks the assumptions most load tests were built on. You can’t treat an AI agent like another API endpoint. Each request is a conversation, not a click. Each response depends on the last. And each session grows heavier as context accumulates.

To keep these systems reliable, performance engineers need a new playbook—one that understands how to simulate concurrent reasoning, not just concurrent traffic. This article outlines modern, AI-powered strategies for testing agents at scale and keeping them performant as complexity rises.

Performance Challenges in AI Agent Load Testing

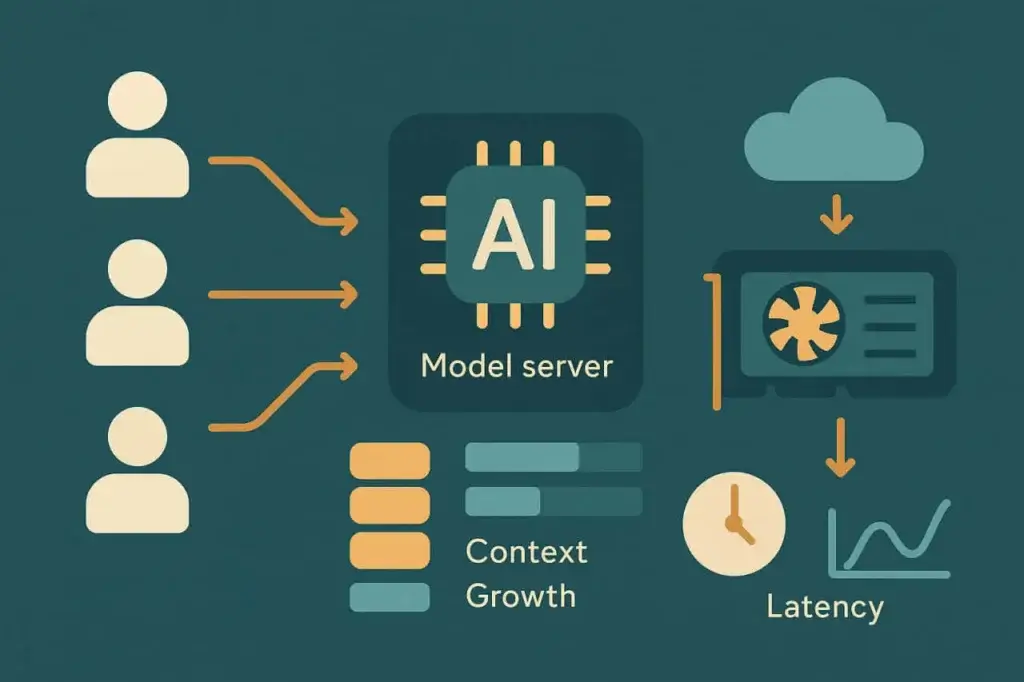

AI workloads don’t behave like web or mobile traffic. Every “user” in an AI-driven system can represent a series of chained operations: a prompt expansion, retrieval of relevant context, model inference, and post-processing or tool execution. The load isn’t fixed—it evolves with each turn in the interaction.

As these layers stack, performance degradation becomes nonlinear. A 2x increase in concurrent users doesn’t mean 2x the latency—it might mean 5x, depending on model load, memory, and GPU allocation. Traditional load testing metrics like requests per second or average response time miss the underlying dynamics. What matters here is latency elasticity—how performance bends as sessions multiply.
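The article does not give a formula for latency elasticity, so the sketch below assumes one possible definition: the log-log slope between two ramp stages. A value of 1.0 means latency grows in proportion to load, and anything above 1.0 means it grows faster. The function name and the sample numbers are illustrative, not measurements.

```python
import math

def latency_elasticity(users_a, latency_a, users_b, latency_b):
    """Log-log slope of latency versus concurrency between two ramp stages.

    1.0 means proportional growth; values above 1.0 mean latency is
    rising faster than load (assumed definition, see lead-in).
    """
    return math.log(latency_b / latency_a) / math.log(users_b / users_a)

# Illustrative numbers: doubling users (50 -> 100) multiplies p95 latency by 5.
elasticity = latency_elasticity(50, 1.2, 100, 6.0)
print(f"elasticity: {elasticity:.2f}")
```

Tracking this number stage by stage makes the point where the curve starts to bend easier to spot than a raw latency chart.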

There are several recurring stress factors in AI agent systems:

Context Accumulation

Every user query carries historical context—sometimes thousands of tokens of prior conversation or document data. As context length grows, prompt size inflates and model inference time rises. At scale, this creates unpredictable latency spikes and queueing pressure on GPUs.
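A rough way to see the effect is to model prompt size per turn. The sketch below assumes the agent resends the full history on every turn and uses made-up token counts for the system prompt, user message, and reply. Real agents may truncate or summarize history, which would flatten the curve.

```python
def prompt_tokens_per_turn(turns, system_tokens=200, user_tokens=50, reply_tokens=150):
    """Prompt size at each turn when the full history is resent every time.

    All token counts are hypothetical defaults for illustration.
    """
    sizes = []
    history = 0
    for _ in range(turns):
        sizes.append(system_tokens + history + user_tokens)
        history += user_tokens + reply_tokens  # this turn joins the history
    return sizes

sizes = prompt_tokens_per_turn(5)
print(sizes)        # prompt tokens sent on each turn
print(sum(sizes))   # total prompt tokens processed over the session
```

Under these assumptions each turn adds 200 tokens to the prompt, so the total tokens processed over a session grows roughly with the square of the number of turns.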

Compute-Bound Scaling

Unlike web servers, large model inference can’t always scale horizontally. Model weights and context windows consume fixed GPU memory; exceeding capacity means queuing requests or falling back to smaller models. That makes concurrency limits far stricter than in CPU-based systems.

Retrieval Latency

Many agents pull external data from vector databases, APIs, or document stores before generating a response. These dependencies add I/O latency and are often the first bottleneck under burst traffic.

Session Persistence

Traditional load tests replay stateless requests. AI sessions are stateful. Each one carries memory, embeddings, and cached context. The longer the conversation, the heavier the session footprint.

These factors combine into a new kind of stress profile. The system may appear healthy at 100 concurrent users but buckle at 120, not from bandwidth exhaustion but from GPU queue saturation or context cache overflow. Load testing AI systems means revealing where those nonlinear inflection points begin.

Understanding AI Agent Workload Behavior

Before designing tests, it helps to model how an AI agent actually behaves under the hood. Most production agents follow a similar pipeline:

- Input ingestion. User sends a query or message.

- Context assembly. The agent collects relevant data from prior turns or an external store.

- Model inference. The assembled prompt is sent to a local or remote model endpoint.

- Post-processing. The output may be parsed, validated, or enriched before returning.

- Response delivery. The agent updates UI state or sends an API response.

Each stage adds variability. Inference time scales roughly with input and output token counts. Retrieval latency depends on database proximity and cache hit rates. Context assembly cost rises with every turn in the conversation.
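To see which stage contributes the variability, it helps to time each one separately. The following is a minimal sketch: the three stage functions are hypothetical stand-ins for real context assembly, inference, and post-processing calls, and only the timing wrapper is the point.

```python
import time
from contextlib import contextmanager

@contextmanager
def timed(stage, timings):
    """Record wall-clock seconds spent inside one pipeline stage."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = time.perf_counter() - start

# Hypothetical stand-ins; replace with the agent's real calls.
def assemble_context(query):
    return f"[history] {query}"

def run_inference(prompt):
    return f"answer to: {prompt}"

def post_process(raw):
    return raw.strip()

def handle_request(query):
    timings = {}
    with timed("context_assembly", timings):
        prompt = assemble_context(query)
    with timed("model_inference", timings):
        raw = run_inference(prompt)
    with timed("post_processing", timings):
        reply = post_process(raw)
    return reply, timings

reply, timings = handle_request("What is our refund policy?")
print(timings)
```

Collecting these per-stage timings for every synthetic request lets you attribute a latency spike to retrieval, inference, or post-processing instead of guessing.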

To make sense of performance behavior, you have to observe how these dimensions interact. For instance, doubling prompt length might raise average inference latency by 60%, but concurrency beyond a certain threshold might raise it 300%. Those curves matter more than any single metric.

Load testing AI systems is partly statistical. You’re not just measuring throughput—you’re building response distributions. The tails of those distributions—the 95th and 99th percentile latencies—tell you when the model or infrastructure begins to saturate. That’s where most user-facing slowdowns originate.
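A small example shows why the tails matter more than the average. The latency sample below is synthetic: 90 fast responses, 8 slow ones, and 2 very slow ones. It uses `statistics.quantiles` from the Python standard library.

```python
from statistics import mean, quantiles

# Synthetic latencies in seconds, for illustration only.
latencies = [1.0] * 90 + [3.0] * 8 + [10.0] * 2

# n=100 returns 99 cut points; cuts[k - 1] is the k-th percentile.
cuts = quantiles(latencies, n=100)
summary = {
    "mean": mean(latencies),
    "p50": cuts[49],
    "p95": cuts[94],
    "p99": cuts[98],
}
print(summary)
```

Here the mean is 1.34 s and the median is 1 s, yet the 95th percentile is 3 s and the 99th is 10 s. A report that shows only the average would hide the slowdowns that one user in twenty actually experiences.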

In practice, mapping workload behavior means running progressive ramp tests. Start with a handful of concurrent sessions, capture baseline latency, then scale up incrementally. Watch how token throughput, queue times, and GPU utilization respond. Every agent has its own scaling signature, and finding it is the first step toward reliable operations.
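Once the ramp data is in hand, locating the inflection point can be as simple as comparing each stage to the baseline. The sketch below assumes a rule of thumb (p95 above twice the baseline counts as saturated) and uses invented stage results; both the threshold and the numbers should be replaced with your own.

```python
def first_saturated_stage(stages, factor=2.0):
    """Return the first concurrency level whose p95 latency exceeds
    `factor` times the first stage's p95, or None if none does.

    `stages` is a list of (concurrent_sessions, p95_seconds) in ramp order.
    """
    baseline_p95 = stages[0][1]
    for users, p95 in stages[1:]:
        if p95 > factor * baseline_p95:
            return users
    return None

# Illustrative ramp results: healthy through 100 sessions, degraded at 120.
ramp_results = [(10, 1.0), (50, 1.1), (100, 1.4), (120, 4.2)]
knee = first_saturated_stage(ramp_results)
print(knee)
```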

Core Metrics to Measure in AI Agent Load Tests

Traditional metrics—RPS, TTFB, error rate—still apply, but they don’t tell the full story. AI agent load testing introduces new metrics that reflect how intelligence, not just infrastructure, scales.

Inference latency measures the total time from prompt submission to completed model response. It’s the most direct performance signal but must be tracked alongside input size and model type. Comparing raw averages without context size normalization can be misleading.

Context scaling quantifies how latency grows as the prompt window expands. Engineers can plot response time against token count to visualize the cost curve. A well-optimized system shows linear or sublinear scaling, while a poorly optimized one shows sharply superlinear growth beyond certain context thresholds.
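One way to read that cost curve numerically, sketched here with invented measurements, is to compute the marginal latency per additional token between adjacent context sizes. A flat marginal cost suggests linear scaling, and a jump marks the threshold worth investigating.

```python
def marginal_cost_per_token(points):
    """Seconds of extra latency per extra prompt token between adjacent
    measurements. `points` is a list of (prompt_tokens, latency_seconds)
    sorted by prompt_tokens."""
    return [
        (t2, (l2 - l1) / (t2 - t1))
        for (t1, l1), (t2, l2) in zip(points, points[1:])
    ]

# Illustrative measurements: linear up to 4,000 tokens, steeper after that.
measurements = [(1000, 0.5), (2000, 1.0), (4000, 2.0), (8000, 6.0)]
costs = marginal_cost_per_token(measurements)
for upper_tokens, seconds_per_token in costs:
    print(upper_tokens, f"{seconds_per_token * 1000:.2f} ms/token")
```

In this made-up data the marginal cost doubles in the last segment, which is the kind of threshold the plot would show as a bend in the curve.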

Token throughput—tokens processed per second across concurrent sessions—reflects both performance and cost efficiency. Since most APIs bill per token, throughput decline translates directly into cost inefficiency.
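As a simple illustration, aggregate throughput can be computed from the completions observed in a measurement window. The record fields (`input_tokens`, `output_tokens`) and the numbers are assumptions for this sketch, and counting input plus output tokens is one convention among several.

```python
def token_throughput(completions, window_seconds):
    """Tokens processed per second across all sessions in one window.

    Counts input and output tokens together (an assumed convention).
    """
    total_tokens = sum(c["input_tokens"] + c["output_tokens"] for c in completions)
    return total_tokens / window_seconds

# Illustrative completions observed during a 10-second window.
window = [
    {"input_tokens": 1200, "output_tokens": 300},
    {"input_tokens": 800, "output_tokens": 200},
    {"input_tokens": 2000, "output_tokens": 500},
]
throughput = token_throughput(window, window_seconds=10)
print(throughput)
```

Comparing this figure across concurrency stages shows whether added sessions are buying more work or just longer queues.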

Dependency latency captures delays from supporting systems: vector search indexes, knowledge bases, or plugin APIs. These can dominate total response time even when inference is fast.

Concurrency stability measures how the system behaves under simultaneous load. Does latency grow predictably? Do error rates stay bounded? Or do response times oscillate wildly as queues form?

By combining these metrics, teams can construct a holistic picture of performance. The aim isn’t just to measure speed—it’s to understand where and why degradation starts.

Designing Effective Load Tests for AI Systems

With the metrics defined, testing strategy becomes about simulation fidelity. AI agents don’t serve identical requests, so recording a single transaction and replaying it under load is useless. Each synthetic user must represent variation—different prompts, lengths, and behaviors. The goal is not uniformity but realism.

1. Model the full reasoning pipeline, not just the endpoint

Real users don’t hit /generate in isolation. They authenticate, submit context, invoke retrieval, and then generate output. A credible load test mimics that sequence. Skip a layer, and your data becomes meaningless.

2. Parameterize prompts to reflect real diversity

AI systems slow down as input length or semantic complexity increases. Use variable prompt templates that adjust token counts, sentence structures, or embedded context depth. This surfaces how scaling affects response time distribution.
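A prompt generator along these lines might look like the following. It is a sketch only: the topics, question templates, and filler context are placeholders, and a real test would draw them from anonymized production traffic. A seeded random generator keeps runs reproducible.

```python
import random

# Placeholder content; swap in samples that resemble real traffic.
TOPICS = ["billing", "shipping", "account security", "API limits"]
QUESTIONS = [
    "Summarize the key points about {topic}.",
    "What should a new customer know about {topic}?",
    "List common problems with {topic} and how to fix them.",
]

def build_prompt(rng, max_context_sentences=40):
    """Build one synthetic prompt with a random topic, question shape,
    and amount of embedded context."""
    topic = rng.choice(TOPICS)
    n_context = rng.randint(0, max_context_sentences)
    context = " ".join(f"Background note {i} on {topic}." for i in range(n_context))
    question = rng.choice(QUESTIONS).format(topic=topic)
    return {"prompt": f"{context} {question}".strip(), "context_sentences": n_context}

rng = random.Random(42)  # fixed seed so every test run sends the same mix
prompts = [build_prompt(rng) for _ in range(100)]
lengths = sorted(len(p["prompt"]) for p in prompts)
print(lengths[0], lengths[-1])  # shortest and longest prompt, in characters
```

Recording `context_sentences` alongside each request makes it possible to group latency results by prompt size afterwards.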

3. Ramp concurrency gradually

AI backends often queue requests at the inference layer. Instead of spiking instantly to 1000 users, ramp gradually in defined stages—say, 10 → 50 → 100 → 200—holding each for several minutes. The resulting curve reveals where GPU or thread saturation begins.
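The staged ramp described above can be expressed as a small schedule. This sketch uses the stage sizes from the text and assumes a five-minute hold per stage; the helper names are illustrative and not tied to any particular load testing tool.

```python
def ramp_schedule(stages, hold_seconds):
    """Turn a list of concurrency levels into timed stages."""
    plan, start = [], 0
    for users in stages:
        plan.append({"users": users, "start_s": start, "end_s": start + hold_seconds})
        start += hold_seconds
    return plan

def target_users(plan, elapsed_s):
    """Concurrency the test should be holding at a given elapsed time."""
    for stage in plan:
        if stage["start_s"] <= elapsed_s < stage["end_s"]:
            return stage["users"]
    return 0  # test finished

plan = ramp_schedule([10, 50, 100, 200], hold_seconds=300)
for stage in plan:
    print(stage)
```

Holding each level long enough for queues to settle is what makes the per-stage percentiles comparable.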

4. Track cost alongside performance

Unlike web servers, inference APIs incur per-token cost. During load tests, calculate cost-per-request at each concurrency level. Performance tuning should include economic efficiency—fast but expensive models can fail at scale financially even if they pass technically.
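Cost per request is simple arithmetic once token counts are logged. The prices below are hypothetical placeholders, not any provider's actual rates, and the split into input and output pricing is an assumption that matches how many token-billed APIs charge.

```python
def cost_per_request(input_tokens, output_tokens,
                     input_price_per_1k, output_price_per_1k):
    """Cost of one request under per-1,000-token pricing."""
    return (input_tokens / 1000) * input_price_per_1k \
         + (output_tokens / 1000) * output_price_per_1k

# Hypothetical prices in dollars per 1,000 tokens.
INPUT_PRICE = 0.003
OUTPUT_PRICE = 0.015

cost = cost_per_request(2000, 500, INPUT_PRICE, OUTPUT_PRICE)
print(f"${cost:.4f} per request")
```

Multiplying this by the request rate at each concurrency stage gives a cost curve to set next to the latency curve.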

5. Include retry and timeout behavior

AI endpoints often rate-limit or degrade under heavy use. Simulate client retry logic to observe compounding load effects. Naive retries, with no backoff, jitter, or cap on attempts, can double effective traffic when failures spike.
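The compounding effect is easy to estimate. The sketch below assumes every attempt fails independently with the same probability and that clients retry up to a fixed number of times. Both are simplifications, since real failures under overload tend to be correlated.

```python
def retry_amplification(failure_rate, max_retries):
    """Expected attempts per logical request when each attempt fails
    independently with probability `failure_rate` and the client
    retries up to `max_retries` times."""
    return sum(failure_rate ** k for k in range(max_retries + 1))

# With half of all attempts failing and three retries allowed,
# the backend sees almost twice the nominal request rate.
amplification = retry_amplification(0.5, 3)
print(amplification)
```

Backoff does not change this expected count, it only spreads the attempts over time. Capping retries or shedding load early is what lowers it.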

These strategies replace the old “record and replay” model with a behavioral simulation mindset. Instead of testing transactions, you’re testing cognition—how the system thinks and scales simultaneously.

AI-Powered Load Testing: Let the Models Help

Ironically, AI can help solve the very problem it creates. Modern testing platforms are beginning to embed machine learning models directly into their analysis loops, producing what’s often called AI-powered load testing.

Here, AI plays three roles:

Prompt generation

Rather than manually crafting hundreds of test inputs, a generative model can produce prompt variations that simulate real user diversity. It can adjust tone, structure, and context automatically, exposing the system to a broader range of stress patterns.

Anomaly detection

AI models can detect statistical drift in performance data faster than static thresholds. Instead of alerting when latency exceeds a fixed limit, anomaly models learn normal variance and surface outliers that signal genuine degradation.
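A rolling z-score is about the simplest stand-in for such an anomaly model, and it is used here only to illustrate the idea of learning normal variance. Production tools would likely use something more robust. The window size, threshold, and latency series are all assumptions.

```python
from statistics import mean, stdev

def find_anomalies(samples, window=20, z_threshold=3.0):
    """Indexes of samples that deviate from the preceding `window`
    samples by more than `z_threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma > 0 and abs(samples[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies

# Synthetic latency series hovering around 1.0 s, with one 1.8 s outlier.
samples = [1.0 + 0.05 * ((i % 5) - 2) for i in range(40)]
samples[30] = 1.8
anomalies = find_anomalies(samples)
print(anomalies)
```

A fixed alert at, say, 2 seconds would never fire on this series, while the learned baseline flags the 1.8 s sample as well outside normal variance.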

Predictive saturation analysis

By analyzing historical load data, AI can forecast when a system will hit its next performance cliff. Regression models or time-series predictors identify nonlinear scaling patterns before they break production.
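As a toy version of that forecast, the sketch below fits a power law to latency versus concurrency with ordinary least squares in log space, then extrapolates to the concurrency at which a latency target would be breached. The power-law shape, the synthetic observations, and the 10-second target are all assumptions. Real systems often hit a hard cliff that no smooth curve predicts, so treat the result as an early warning and not a guarantee.

```python
import math

def fit_power_law(observations):
    """Least-squares fit of latency = coeff * users ** exponent in log space.

    `observations` is a list of (concurrent_users, latency_seconds).
    """
    xs = [math.log(u) for u, _ in observations]
    ys = [math.log(l) for _, l in observations]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    exponent = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
                / sum((x - mean_x) ** 2 for x in xs))
    coeff = math.exp(mean_y - exponent * mean_x)
    return coeff, exponent

def users_at_latency(coeff, exponent, target_seconds):
    """Concurrency at which the fitted curve reaches the latency target."""
    return (target_seconds / coeff) ** (1 / exponent)

# Synthetic history generated from latency = 0.01 * users ** 1.5.
history = [(u, 0.01 * u ** 1.5) for u in (10, 20, 40, 80)]
coeff, exponent = fit_power_law(history)
limit = users_at_latency(coeff, exponent, target_seconds=10.0)
print(f"exponent {exponent:.2f}, target breached near {limit:.0f} users")
```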

The benefit isn’t magic automation; it’s acceleration. Engineers spend less time on repetitive maintenance and more on interpreting why performance shifts. AI-powered load testing turns manual scripting into adaptive experimentation.

Implementing AI Agent Tests in LoadView

AI agents may be cutting-edge, but they still communicate through familiar protocols: HTTP and WebSocket, typically exposed as REST-style APIs. That means you can test them with the same infrastructure LoadView already provides.

API-based testing is the foundation. Each agent request, regardless of complexity, ultimately resolves to an API call—often JSON over HTTPS. LoadView’s API testing lets teams simulate thousands of concurrent inference requests, each parameterized with dynamic payloads. You can vary prompt size, inject context, and measure latency end-to-end.

UserView scripting extends that simulation through the user interface. Many AI agents live inside dashboards, chat portals, or SaaS integrations. With LoadView, you can record full workflows—login, prompt entry, response rendering—and replay them from distributed cloud locations. This approach validates both backend and frontend behavior under real browser conditions.

Scalable orchestration ties it all together. LoadView’s cloud network distributes tests across geographies, letting teams see how global traffic affects AI endpoints that depend on centralized GPU clusters. By correlating response times with geographic distance, you can separate network latency from model latency.

Analytics and reporting complete the feedback loop. LoadView tracks all standard performance metrics but can also be customized to capture certain types of data. That combination turns synthetic testing into an observability layer for AI performance.

In other words, you don’t need a new testing platform for AI systems—you need smarter test design inside an existing one. LoadView already has the primitives, and this new class of workloads simply demands a different testing philosophy.

The Future of AI Load Testing

AI systems aren’t static services—they’re adaptive, stochastic, and continuously retrained. That means their performance characteristics shift even when infrastructure stays the same. A model update that improves accuracy might double inference time. A prompt change that boosts coherence might explode context size. Load testing must evolve to account for those moving targets.

Future performance testing will blend simulation, analytics, and self-learning feedback loops. Tests will adapt in real time, expanding or narrowing load based on observed model stability. Instead of “run test, read report,” engineers will maintain continuous performance baselines that update as models drift.

The focus will move beyond throughput. The key question won’t be “Can it handle 1000 users?” but “Can it think consistently under pressure?” Measuring cognitive stability—how reasoning quality degrades when demand spikes—will become a standard metric.

For organizations deploying AI copilots, chat assistants, and automated decision agents, this evolution is already underway. The systems are new, but the principle remains timeless: you can’t improve what you don’t measure. Load testing has always been the discipline of exposing hidden limits. AI just adds a new kind of limit—the boundary between performance and intelligence.

Load Testing for AI Agents – Wrapping It All Up

AI agents introduce a new dimension to performance testing. They combine heavy computation, dynamic context, and unpredictable scaling. Traditional load testing scripts can’t keep up, but AI-powered approaches can. By focusing on inference latency, context scaling, and concurrency stability—and by using tools like LoadView to simulate full reasoning workflows—teams can preserve reliability even as intelligence becomes the core of their systems.

The next era of load testing won’t just measure how fast systems respond. It will measure how well they think when everyone asks at once.