When it comes to load testing, there’s always the million-dollar question: “In terms of performance, what does the client want to do with their application and system?” You will rarely get an easy answer to that question, so most of us make assumptions and carry out the performance testing anyway. That can mean missing critical pieces and ending up with an unhappy client. An unhappy client means loss of business for your organization and a declining career as a performance engineer. But do not worry: in this article we are going to talk about creating a checklist that will help you with those questions.

This checklist is more like a step-by-step process that you can follow and adapt to your performance testing life cycle. In the scenario above, we can’t always blame our clients: initially they might not know exactly what performance outcomes they want, but they do have a clear knowledge of how the application works under different conditions. A good performance tester can manage this ambiguity with a well-defined questionnaire. And that is the very first item on the checklist: the requirement gathering questionnaire.

Requirement Gathering Questionnaire

Below is a questionnaire format that you can follow in your project. We need to get answers to as many of these questions as possible. It is better if you can set up a call to discuss them. Make sure the application architect or developer joins the call with you and the client. Having additional people on the call can provide insight and uncover questions that you may not have previously considered. The questions below give you a roadmap to creating a more efficient and effective load test.

| No | Topic | Questions |
|----|-------|-----------|
| 1 | Application | Type of application to be considered for testing (for example, web application, mobile application, etc.). |
| 2 | Application | Application architecture and technology/platform. |
| 3 | Application | Load balancing technique used. |
| 4 | Application | Communication protocol between client and server (for example, HTTP/S, FTP). |
| 5 | Application | Performance testing objective. Performance metrics to be monitored (for example, response time for each of the actions, concurrent users, etc.). |
| 6 | Application | Critical scenarios to be considered for performance testing. |
| 7 | Application | Details of background scheduled jobs/processes, if any. |
| 8 | Application | Technique used for session management and number of parallel sessions supported. |
| 9 | Customer SLA/Requirements | Expected number of concurrent users and total user base. User distribution for scenarios in scope. |
| 10 | Customer SLA/Requirements | SLA for all transactions in scope for PT (expected throughput, response time, etc.). |
| 11 | Customer SLA/Requirements | Types of performance testing to be performed (load testing, endurance testing, etc.). |
| 12 | System/Environment | Test environment details (web/app/DB, etc., along with number of nodes). Production-like environment recommended for performance test execution. |
| 13 | System/Environment | Comparison of production environment and performance test environment. |
| 14 | System/Environment | Whether the application is to be isolated during performance test execution to avoid interaction with other applications. |
| 15 | System/Environment | Details of built-in logging mechanism or monitoring mechanism. |
| 16 | Others | Application performance baseline results. |
| 17 | Others | Current performance issues, if any. |

Answers to these questions will help you create a quality test strategy/test plan. If the client isn’t able to provide answers to all these questions, there is no need to worry. We can always explore the application under test and find the answers. For example, if an APM/log tool is in place, we can derive concurrent users, throughput, and response time from the production system. Never assume that you will get what you need without asking.
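As a rough illustration of deriving concurrent users from production data, the sketch below assumes you can export each user session as a start and end timestamp. That export format, the function name `peak_concurrent_users`, and the sample data are all hypothetical; a real APM or log tool will have its own format. It sweeps through the session open and close events in time order and records the highest overlap.

```python
from datetime import datetime


def peak_concurrent_users(sessions):
    """Return the highest number of sessions that overlap at any moment.

    `sessions` is a list of (start, end) datetime pairs, one per session.
    """
    events = []
    for start, end in sessions:
        events.append((start, 1))   # a session opens
        events.append((end, -1))    # a session closes
    # Sort by time; at identical timestamps, closes (-1) sort before opens (+1)
    events.sort(key=lambda event: (event[0], event[1]))

    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


def ts(text):
    """Parse an HH:MM string (illustrative helper for the sample data)."""
    return datetime.strptime(text, "%H:%M")


# Hypothetical sessions exported from production logs
sample_sessions = [
    (ts("09:00"), ts("09:30")),
    (ts("09:10"), ts("09:40")),
    (ts("09:20"), ts("09:25")),
    (ts("09:30"), ts("10:00")),
]
print(peak_concurrent_users(sample_sessions))
```

Running the same calculation over a typical business day gives a starting point for the concurrent-user target, which you can then confirm with the client.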

Find a Suitable Performance Testing Tool

A performance tester should choose the performance testing tool carefully. There are a lot of factors to consider before you finalize and propose a tool. Remember, client budget is always a major factor when choosing a performance testing tool.

If you’re looking for a performance testing tool which is cost effective, easy to use, and can provide a complete performance solution, you should definitely try LoadView. Compared to traditional load testing tools, LoadView does not require any costly investment, time-consuming setup, or previous knowledge of scripting frameworks. Additionally, the platform provides more than 20 global locations to spin-up load injectors from, so you can test and measure performance from multiple locations during each test.

All types of performance testing require time and effort. Load testing can save an organization from potential humiliation, but at the same time, tests run on a free open-source tool like JMeter won’t justify the investment. When it comes to truly understanding website or application performance from the end user’s perspective, protocol-based tests are not enough. You must also measure the performance of client-side interactions and steps. Here is a comparison of LoadView against other performance/load testing solutions and why you should choose LoadView for your performance testing requirements.

When it comes to slow-loading sites and applications, customers can quickly become impatient and will leave to find a replacement if your site or application doesn’t meet their expectations. Knowing how much load your site or application can handle is important for various reasons, and there are several significant scenarios that the LoadView platform can assist with:

- Adaptability and scalability. Determining why your site or application slows down when multiple users access it.

- Infrastructure. Understanding what hardware upgrades are needed, if any. The cost of implementing additional hardware, and maintaining it, could be a potential waste of resources.

- Performance requirements. Your site or application can properly handle a few users, but what happens in real-world situations?

- Third-party services. See how external services behave when normal, or even peak, load conditions are presented.

Performance Testing Plan/Strategy

Once you have gathered the client requirements and selected a suitable performance testing tool, you need to document the test plan/test strategy. Make sure to get test plan sign-off from the client before you start any performance activities. This is very important, and it will help you avoid unnecessary glitches later. These are some points that need to be included in the test plan.

- Performance Test Objectives. What we are going to achieve.

- Project Scope. What the scope of the project is (for example, number of scripts, how long we need to test, etc.).

- Application Architecture. Application details such as the app server and DB server. You can include an architectural diagram if you have one.

- Environment Details. Details about the environment we are going to test. It’s always good to have an isolated environment for performance testing.

- Infrastructure Setup. Initial setup for the performance testing (for example, cloud environment, tool installation, etc.).

- Performance Test Approach. How we’re going to carry out the test. We should start with a baseline test with a smaller number of users, then gradually increase the users and perform different types of tests like stress, endurance, etc.

- Entry and Exit Criteria. This is very important. We should always start performance testing when there are zero functional defects. In the same way, we should document when we can stop performance testing.

- Defect Management. We should follow the same tools and practices the client uses to log defects related to performance testing.

- Roles and Responsibilities. Details about the stakeholders involved in the different activities during performance testing.

- Assumptions and Risks. If there are objectives that pose a risk to performance testing, we should document them.

- Test Data Strategy. Details about the test data strategy and how we can extract the data.

- Test Plan Timeline and Key Deliverables. Timeline of scripting, test execution, analysis, and deliverables for client review.

The actual performance testing relies on a combination of techniques. To achieve the expected goals, we need to prepare a different strategy for each performance testing requirement. There are different factors, such as user load, concurrent users, and workload models, that need to be considered before planning the load. If you have worked on workload modeling before, you will have heard of Little’s law. You should learn it and apply it before planning a test to get the desired results.
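As commonly applied to load testing, Little’s law says that the average number of users in the system equals throughput multiplied by the time each user spends per iteration: N = X × (R + Z), where X is throughput, R is response time, and Z is think time. The sketch below is a minimal illustration of that arithmetic. The function names and the sample figures are assumptions made for the example, not values from any real system.

```python
import math


def required_users(throughput_per_sec, response_time_sec, think_time_sec=0.0):
    """Users needed to sustain a target throughput: N = X * (R + Z)."""
    return math.ceil(throughput_per_sec * (response_time_sec + think_time_sec))


def expected_throughput(users, response_time_sec, think_time_sec=0.0):
    """Throughput a given user count can generate: X = N / (R + Z)."""
    return users / (response_time_sec + think_time_sec)


# Hypothetical target: 50 transactions/s, 2 s response time, 8 s think time
users = required_users(50, 2.0, 8.0)
print(users)                                 # users to configure in the test
print(expected_throughput(users, 2.0, 8.0))  # sanity check against the target
```

If the measured throughput during a test is far from what this calculation predicts, it is worth checking whether think time, pacing, or response times differ from what the plan assumed.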

Real-time Performance Scripts/Scenarios

Once we’ve agreed on a performance plan or strategy with the client, it’s time to prepare for scripting using the chosen performance testing tool. Building quality scripts is key to successful performance testing, and there are several important considerations to keep in mind throughout the process.

First, always follow the documented test case steps when creating scripts to ensure accuracy. Start by replaying the script for a single user and addressing any correlation or parameterization needs. Parameterization might include headers, cookies, or body parameters. Once the script works seamlessly for a single user, test it with multiple iterations and different user data. Be prepared to adjust correlation or parameterization as new data may uncover additional requirements.

In some cases, achieving certain use cases might require writing custom blocks of code. For instance, you may need to extract specific response data for a user and manipulate it for another request. Additionally, don’t forget to include think time, pacing, and error-handling mechanisms based on the workload model. Verifying the script’s functionality with text or image checks is equally important to ensure the desired outcomes.
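To make correlation concrete, here is a minimal sketch of extracting a dynamic value from one response and reusing it in the next request, plus a randomized think time. The field name `csrf_token`, the HTML snippet, and the helper names are hypothetical. Most load testing tools provide their own extractors for this, so treat it as an illustration of the idea and not any tool’s API.

```python
import random
import re

# Assumed shape of the hidden form field that carries the dynamic value
TOKEN_PATTERN = re.compile(r'name="csrf_token"\s+value="([^"]+)"')


def extract_token(response_body):
    """Correlation: pull the dynamic token out of a previous response."""
    match = TOKEN_PATTERN.search(response_body)
    if match is None:
        # Error handling: fail loudly so a broken correlation is not missed
        raise ValueError("csrf_token not found; check the correlation rule")
    return match.group(1)


def build_login_request(username, password, token):
    """Parameterization: user data plus the correlated token."""
    return {"username": username, "password": password, "csrf_token": token}


def think_time(min_sec=1.0, max_sec=3.0):
    """Random pause, in seconds, to apply between user actions."""
    return random.uniform(min_sec, max_sec)


sample_response = '<form><input type="hidden" name="csrf_token" value="a1b2c3"></form>'
token = extract_token(sample_response)
payload = build_login_request("user01", "secret", token)
print(payload)
```

Running the script with several different users is what usually reveals values like this that were recorded as static but change on every session.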

Beyond scripting, consider factors like simulating cache, cookies, and varying network conditions to reflect realistic scenarios. As a performance engineer, creating realistic virtual user behavior is critical. This process starts with gathering accurate details during the requirement phase.

Key questions to address include:

- What is the total number of users interacting with the application during business hours?

- What actions do users typically perform, and how often?

- How many users access the application during peak load times?

These answers can be obtained through a requirement-gathering questionnaire or by analyzing statistical data collected from tools like APM solutions or Google Analytics. Transaction analysis is especially helpful in identifying critical business transactions and designing performance test scenarios that accurately reflect real-world usage. By addressing these steps, you’ll be well-equipped to execute effective performance tests and deliver reliable results.
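One way to turn transaction analysis into a scenario design is to split the virtual users across scripts in proportion to observed production traffic. The sketch below assumes you already have per-transaction counts from an APM or analytics export. The transaction names and counts are made up for illustration, and `distribute_users` is a hypothetical helper.

```python
def distribute_users(total_users, transaction_counts):
    """Split users across scripts in proportion to observed transaction counts.

    Uses largest-remainder rounding so the allocation sums to total_users.
    """
    total = sum(transaction_counts.values())
    if total <= 0:
        raise ValueError("transaction counts must sum to a positive number")

    exact = {name: total_users * count / total
             for name, count in transaction_counts.items()}
    allocation = {name: int(share) for name, share in exact.items()}

    # Give any users lost to rounding to the largest fractional shares
    leftover = total_users - sum(allocation.values())
    by_remainder = sorted(exact, key=lambda n: exact[n] - allocation[n], reverse=True)
    for name in by_remainder[:leftover]:
        allocation[name] += 1
    return allocation


# Hypothetical hourly transaction counts from production
observed = {"search": 5000, "browse": 3000, "checkout": 1500, "login": 500}
print(distribute_users(200, observed))
```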

Workload Modeling

We can plan our workload model in different ways. Performance engineers should learn and practice Little’s law before selecting a workload model. Below are some common workload models. Again, the performance engineer should choose a workload model based on the requirements at hand.

Workload Model 1. This is a simple model in which the number of users increases continuously as the test progresses. Example: one user is added per second until the test is completed.

Workload Model 2. In this model, the number of users increases in steps for the entire duration of the test. For example, the first 15 minutes run 100 users, the next 15 minutes run 200, and so on. We can plan this type of test for endurance testing.

Workload Model 3. This is the most common performance testing model. The number of users increases continuously for a certain time (we call this the ramp-up period). After that, the user count holds at a steady state for a certain duration. Then the users ramp down and the test finishes. For example, if we’re planning 1.5 hours of testing, we can allow 15 minutes for ramping up the users and 15 minutes for ramping down, leaving a steady state of one hour. When we analyze the results, we consider only the steady state.

Workload Model 4. In this model, the number of users is increased and decreased suddenly throughout the test. There are different names for this type of testing, like monkey testing, spike testing, etc.
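The four models above can each be expressed as a function that returns the target number of active users at a given time into the test. The sketch below is one possible way to write them. The function names, parameters, and default values are illustrative assumptions, and real tools expose these shapes through their own configuration.

```python
def linear_ramp(t_sec, users_per_sec=1):
    """Model 1: users increase continuously for the whole test."""
    return int(t_sec * users_per_sec)


def step_load(t_sec, step_users=100, step_duration_sec=900):
    """Model 2: users increase by a fixed step every step_duration_sec."""
    return step_users * (int(t_sec // step_duration_sec) + 1)


def ramp_steady_ramp(t_sec, peak_users, ramp_up_sec, steady_sec, ramp_down_sec):
    """Model 3: ramp up, hold a steady state, then ramp down."""
    end = ramp_up_sec + steady_sec + ramp_down_sec
    if t_sec < 0 or t_sec >= end:
        return 0
    if t_sec < ramp_up_sec:
        return int(peak_users * t_sec / ramp_up_sec)
    if t_sec < ramp_up_sec + steady_sec:
        return peak_users
    return int(peak_users * (end - t_sec) / ramp_down_sec)


def spike_load(t_sec, base_users, spike_users, period_sec, spike_duration_sec):
    """Model 4: sudden jumps to spike_users at the start of each period."""
    in_spike = (t_sec % period_sec) < spike_duration_sec
    return spike_users if in_spike else base_users


# Model 3 with the 1.5-hour example: 15 min up, 60 min steady, 15 min down
for minute in (0, 7.5, 15, 45, 75, 82.5, 90):
    print(minute, ramp_steady_ramp(minute * 60, 500, 900, 3600, 900))
```

Only the samples collected while `ramp_steady_ramp` returns the peak value belong to the steady state used for analysis.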

We need to establish a comprehensive set of goals and requirements for performance testing. Define the performance testing parameters and what constitutes the acceptance criteria for each one. If you know neither the test requirements nor the desired outcome, then the performance testing is a waste of time.

Gathering Data

Below are some of the most important metrics to observe during performance workload modeling.

Response time: Response time is the time the server takes to respond to a client request. There are a lot of factors that affect server response time. A load test helps to find and eliminate the factors that degrade it.

Average response time: This is the average response time over the entire steady-state period of a load test.

90th percentile response time: The 90th percentile response time is the value below which 90 percent of response times fall. For example, if you had 500 requests, each with a different response time, the 90th percentile is the value that 450 of them fall at or below; only 10 percent of response times are higher. This is useful if some of your requests have very large response times that skew the average.

Requests per second: We need to find this value from APM tools or with the help of production logs. Based on the concurrent users, we can plan the requests per second sent to the server.

Memory utilization: This is an infrastructure-side metric that helps you uncover bottlenecks. Also, you should plan your workload to be as realistic as possible. Make sure you are not bombarding the server with continuous requests.

CPU utilization: As with memory, keep an eye on this metric while running the performance tests.

Error rate: We should keep track of the passed/error transaction ratio. Error rate should not be more than 2 percent of passed transactions. If the error rate increases as concurrent users increase, there may be a bottleneck.

Concurrent users: We need to find this value from APM tools or with the help of production logs. Usually, we find this value based on a typical business day.

Throughput: Throughput shows the server’s capacity to handle the concurrent users. There is a direct connection between concurrent users and throughput. Throughput should increase as the number of users accessing the application increases. If throughput decreases as the number of users increases, it is a possible indication of bottlenecks.

User load distribution: User load distribution is one of the important factors to consider while designing the workload. If we have five scripts that carry out five different functionalities of an application, we need to split the user load as realistically as possible, based on our investigation of production data or APM tools.

These are very important metrics to keep in mind while designing the workload model. There will be other metrics as well, depending on the client application architecture and requirements.
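To show how the average and the 90th percentile can tell different stories, the sketch below summarizes a small set of steady-state samples. The sample format (response time in milliseconds plus a pass/fail flag) and the function names are assumptions for illustration. The percentile uses the nearest-rank method, which is one of several common definitions, and the error rate is computed here as failed samples divided by all samples.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(samples):
    """Summarize steady-state samples given as (response_time_ms, passed) pairs."""
    times = [time_ms for time_ms, _ in samples]
    failed = sum(1 for _, passed in samples if not passed)
    return {
        "average_ms": sum(times) / len(times),
        "p90_ms": percentile(times, 90),
        "error_rate_pct": 100 * failed / len(samples),
    }


# Hypothetical samples: nine fast requests and one slow, failed request
samples = [(200, True)] * 9 + [(5000, False)]
print(summarize(samples))
```

In this sample a single slow request pulls the average up to 680 ms while the 90th percentile stays at 200 ms, which is why both figures are worth reporting.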

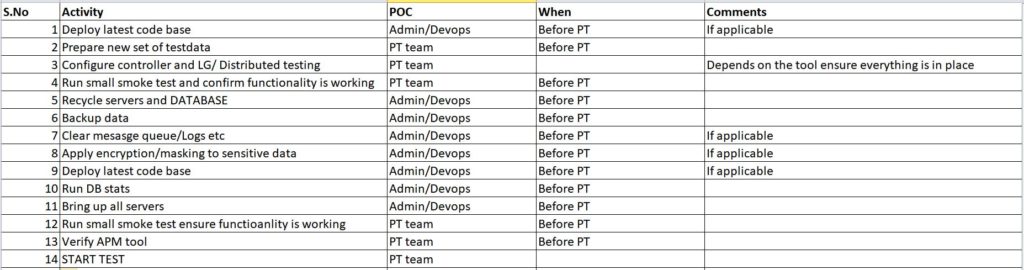

Checklist for Performance Testing Environment (Before Execution)

The checklist example below is usually followed in enterprise-level, end-to-end performance testing, where a large number of systems are involved. It is recommended to follow an environment checklist for small-scale performance testing as well. The activities will change based on the application environment, as there is a big difference between an application in the cloud and an application on-premises.

Conclusion: Load Testing Preparation Checklist

Successful software performance testing doesn’t happen by accident. Architects, product owners, developers, and the performance testing team must work together and address performance testing requirements before assessing the software. For a more detailed background on the LoadView platform, read our Technical Overview article to see how you can leverage your performance testing to the maximum. Performance testing should be an ongoing process. When your website or web application starts growing, you will need to make changes to accommodate a larger user base. There is always room for improvement in any application and its tests.

We hope this gave you good insight into the best practices in performance testing. If you would like a full demonstration of the LoadView solution, sign up for a demo with a performance engineer. They will take you step-by-step through each part of the process, from creating load testing scripts and test configuration, to test execution and results analysis.

Or sign up for the LoadView free trial to start running load tests now. We will give you free load tests to get started! Happy load testing!