A Brief Overview of Performance Testing

Performance testing is a critical method of assessing whether an application or system delivers the speed, stability, and reliability its users expect. It is a broad term encompassing various testing types, each designed to measure a specific aspect of a system’s performance. From load testing, which emulates expected user traffic, to stress testing, which pushes the system beyond its limits, performance testing evaluates how an application or system fares under different scenarios. These tests dive deep into system behavior, unveiling potential bottlenecks, slowdowns, and crashes that could impact availability and functionality.

The Importance of Performance Testing

The importance of performance testing cannot be overstated. In an age where users expect instant responses and seamless experiences, even minor performance issues can lead to significant user dissatisfaction, a tarnished brand reputation, and, ultimately, lost business. Performance testing provides the insights needed to optimize system performance, ensuring that critical systems remain stable and available even under the most demanding usage.

Rigorous performance testing is even more critical for systems such as financial applications, online gaming platforms, or e-commerce applications that must handle heavy traffic or data loads. It helps ensure that these systems are robust, scalable, and capable of delivering high performance consistently, even under extreme circumstances.

In essence, performance testing is an essential part of the software development life cycle, providing a proactive pathway for organizations to enhance their systems and applications, thereby ensuring user satisfaction and business continuity.

Most Popular Types of Performance Testing

1) Load Testing

Load testing is a type of performance testing that evaluates an application’s or system’s performance under typical and expected user load. The primary goal of load testing is to understand how the system processes user traffic and transactions, ensuring it remains stable and accessible under these conditions. It is a crucial step in ensuring the reliability and scalability of applications or systems, especially those with high user traffic or those that handle critical business processes.

The process of load testing involves simulating a workload that mimics expected user traffic and transactions. Testers can achieve this simulation by using automated testing tools or manually inputting data and executing transactions. By applying this simulated load, organizations can identify and address performance issues before they impact end-users.

There are various tools available for load testing, each with its unique features and capabilities. Some of the most commonly used include Apache JMeter, Gatling, and LoadRunner. These tools allow for the creation of realistic load scenarios, detailed reporting, and analysis of system performance under load.

Load testing can present several challenges. It requires a thorough understanding of the system’s architecture and the expected user behavior. Selecting an appropriate load testing tool that can simulate realistic load scenarios is also crucial. Interpreting load testing results requires expertise, as it involves analyzing various metrics and understanding their impact on system performance.

Finding Peak Loads

Identifying peak loads is a critical aspect of load testing. The peak load is the maximum load an application or system can handle before its performance degrades or it fails. Understanding the peak load is vital to ensuring that the application or system can withstand the highest expected user traffic.

Finding the peak load involves gradually increasing the load on the system until it reaches the point where its performance begins to degrade, i.e., response times increase, error rates rise, or resources become fully utilized. The peak load can be different for various applications and depends on factors like system architecture, resources, and the nature of the user requests.
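The ramp-up described above can be summarized in a few lines of code. The sketch below is a minimal illustration, not a feature of any particular tool: it assumes you have already collected one measurement per load step (the hypothetical `LoadStep` fields) and that "degradation" is defined by two thresholds you choose, a 95th-percentile response time and an error rate. The numbers in the example are made up.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class LoadStep:
    users: int             # concurrent virtual users applied during this step
    p95_latency_ms: float  # 95th-percentile response time observed at this step
    error_rate: float      # fraction of failed requests, 0.0 to 1.0


def find_peak_load(steps: Sequence[LoadStep],
                   max_latency_ms: float,
                   max_error_rate: float) -> Optional[int]:
    """Return the highest load level reached before a threshold is breached.

    Steps must be ordered by increasing load. Returns None if even the
    first step already breaches a threshold.
    """
    peak: Optional[int] = None
    for step in steps:
        degraded = (step.p95_latency_ms > max_latency_ms
                    or step.error_rate > max_error_rate)
        if degraded:
            break  # performance has degraded; the previous step was the peak
        peak = step.users
    return peak


# Illustrative (made-up) results from a stepped ramp-up
steps = [
    LoadStep(users=100, p95_latency_ms=180.0, error_rate=0.000),
    LoadStep(users=200, p95_latency_ms=190.0, error_rate=0.001),
    LoadStep(users=400, p95_latency_ms=230.0, error_rate=0.002),
    LoadStep(users=800, p95_latency_ms=950.0, error_rate=0.030),
]
print(find_peak_load(steps, max_latency_ms=500, max_error_rate=0.01))  # 400
```

Stopping at the first breached threshold mirrors the "increase until performance begins to degrade" approach; in practice you would confirm the result with repeated runs before treating it as the peak load.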

Understanding Load Curves

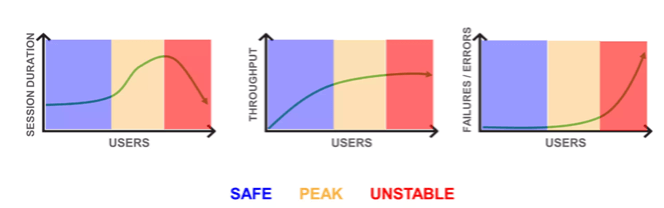

A load curve is a graphical representation of a system’s performance against varying load levels. It plots metrics such as response time, throughput, or resource utilization against the number of users or requests over time. This curve helps to visualize how the system behaves under different load conditions.

Figure 1: Finding the System’s Peak Load

Load Curves

An ideal response-time curve stays relatively flat, indicating that the system’s performance remains stable as the load increases. However, once the load exceeds the system’s capacity (its peak load), the curve begins to rise steeply, showing a degradation in performance.

Understanding the load curve is crucial for interpreting the results of a load test. It helps to identify bottlenecks in the system, understand its behavior under different load levels, and determine its scalability and capacity planning needs. The load curve can also guide system optimizations and improvements to handle increased load effectively.
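One simple way to turn "the curve stays flat, then rises steeply" into a check is to compare each point on a response-time curve with the response time at the lightest load. The sketch below assumes the curve is a list of `(load, response_ms)` pairs and that a point counts as off the flat part once it exceeds a chosen multiple (`tolerance`, a hypothetical parameter) of that baseline. It is a simple heuristic, not a standard knee-detection algorithm.

```python
from typing import List, Optional, Tuple


def find_knee(curve: List[Tuple[int, float]], tolerance: float = 1.5) -> Optional[int]:
    """Return the first load level whose response time exceeds `tolerance`
    times the response time at the lowest load, or None if the curve
    stays within that band (i.e. remains 'flat')."""
    if not curve:
        return None
    points = sorted(curve)      # order points by load level
    baseline = points[0][1]     # response time at the lightest load
    for load, response_ms in points[1:]:
        if response_ms > tolerance * baseline:
            return load
    return None


# Made-up response-time curve: (concurrent users, response time in ms)
curve = [(50, 120.0), (100, 125.0), (200, 130.0), (400, 210.0), (800, 900.0)]
print(find_knee(curve))  # 400
```

A relative tolerance keeps the check independent of the system's absolute speed; if you have a hard service-level target, an absolute threshold is the better choice.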

2) Stress Testing

Stress testing is a critical performance testing methodology that focuses on how an application or system performs under extreme load conditions, often exceeding its maximum capacity. Its fundamental purpose is two-fold. First, it determines the system’s absolute limit, or breaking point, beyond which it can no longer function as expected. Second, it examines how the system recovers from these intense stress situations, a quality often called its resilience or robustness.

Moreover, stress testing is paramount for identifying potential performance issues that may arise under extraordinary circumstances, including system bottlenecks, slowdowns, or crashes. It is pivotal in ensuring the stability and availability of critical systems or applications that must handle heavy traffic or data loads, such as financial applications, online gaming platforms, or e-commerce applications. By ensuring these systems remain robust even under extreme conditions, stress testing helps organizations maintain customer satisfaction and prevent lost revenue.

Executing stress tests involves simulating an extremely high volume of traffic or data, often surpassing the system’s maximum capacity, to identify how the system performs under such conditions. Automated testing tools can achieve this simulation by generating a high volume of users, or individuals can manually input data and execute transactions. The methodology follows a progressive approach where the load gradually increases until the system reaches its breaking point. Observers closely monitor system performance beyond this point, examining how it recovers and handles the extreme load, thus gaining valuable insights into its robustness.
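The progressive approach above, followed by a recovery check, might be sketched like this. `ToySystem` is a hypothetical stand-in for a real system under test (its error-rate model is invented purely for illustration), and `measure_error_rate` represents whatever your load tool reports for a given load level.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class StressResult:
    breaking_point: Optional[int]  # first load level whose error rate exceeded the threshold
    recovered: bool                # did the system behave normally once load dropped back?


def run_stress_test(measure_error_rate: Callable[[int], float],
                    start: int, step: int, max_load: int,
                    failure_threshold: float,
                    recovery_load: int) -> StressResult:
    if step <= 0:
        raise ValueError("step must be positive")
    breaking_point: Optional[int] = None
    load = start
    # Increase the load step by step until the system breaks or max_load is reached
    while load <= max_load:
        if measure_error_rate(load) > failure_threshold:
            breaking_point = load
            break
        load += step
    # Drop back to a normal load and check whether the system has recovered
    recovered = measure_error_rate(recovery_load) <= failure_threshold
    return StressResult(breaking_point, recovered)


class ToySystem:
    """Hypothetical system: degrades above `capacity` and crashes permanently
    once load exceeds capacity * crash_factor."""

    def __init__(self, capacity: int, crash_factor: float = 2.0) -> None:
        self.capacity = capacity
        self.crash_factor = crash_factor
        self.crashed = False

    def error_rate(self, load: int) -> float:
        if load > self.capacity * self.crash_factor:
            self.crashed = True  # simulated failure that does not heal on its own
        if self.crashed:
            return 1.0
        if load <= self.capacity:
            return 0.0
        return (load - self.capacity) / load  # requests beyond capacity fail


result = run_stress_test(ToySystem(1000).error_rate, start=200, step=200,
                         max_load=3000, failure_threshold=0.05, recovery_load=200)
print(result)  # StressResult(breaking_point=1200, recovered=True)
```

With `ToySystem(1000, crash_factor=1.1)` the same run still breaks at 1,200 users, but `recovered` becomes `False`, which is exactly the distinction between a system that bends and one that stays broken.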

Despite its significant benefits, stress testing comes with its challenges. One of the primary challenges is determining the ‘right’ amount of stress or load to test the system’s limits without causing irreparable damage. This requires a deep understanding of the system’s architecture and its components. Another challenge is interpreting the stress test’s results, which can be complex. Unlike other forms of testing where clear pass/fail criteria exist, stress testing results are more nuanced. Although analysts expect the system to fail, they must carefully analyze at which point it fails and how it recovers.

Additionally, stress testing can be time-consuming and resource-intensive, especially for large systems with numerous components. Also, replicating a production-like environment that can emulate extreme conditions can be technically challenging and costly. Despite these challenges, the insights gained from stress testing are invaluable for enhancing system performance and robustness, making it an essential part of the performance testing regime.

3) Endurance Testing

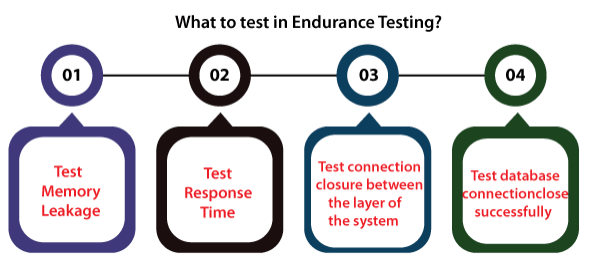

Endurance testing, also known as soak testing, involves simulating a typical production load and sustaining it over a prolonged period, which can range from several hours to days or even weeks, to observe how the system performs under sustained use. It requires creating realistic user scenarios and maintaining a consistent application or system load.

Automated testing tools are typically employed for this process, although manual data entry and transaction execution can also be part of the methodology. The test is designed to reveal issues that only become noticeable over time, such as gradual degradation in response times, memory leaks, or resource exhaustion.
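A common way to spot a memory leak in endurance-test data is to fit a trend line to memory samples taken at regular intervals and look at its slope. The sketch below uses an ordinary least-squares slope; the `max_growth_mb_per_hour` limit is a hypothetical threshold you would set for your own system, and the sample readings are invented.

```python
from typing import Sequence


def slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    if var == 0:
        raise ValueError("xs must not all be equal")
    return cov / var


def looks_like_leak(hours: Sequence[float], memory_mb: Sequence[float],
                    max_growth_mb_per_hour: float = 5.0) -> bool:
    """Flag sustained memory growth above a (hypothetical) acceptable rate."""
    return slope(hours, memory_mb) > max_growth_mb_per_hour


# Invented hourly samples from a seven-hour soak test
hours = [0, 1, 2, 3, 4, 5, 6]
leaking = [512, 530, 548, 566, 584, 602, 620]  # grows about 18 MB per hour
stable = [512, 515, 511, 514, 512, 513, 512]   # fluctuates around a constant level

print(looks_like_leak(hours, leaking))  # True
print(looks_like_leak(hours, stable))   # False
```

Fitting a trend rather than comparing the first and last samples makes the check less sensitive to short-lived spikes, such as those caused by garbage collection.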

Endurance testing comes with its unique set of challenges. Firstly, it is time-consuming due to the need for long-running tests to simulate sustained usage effectively. This can often lead to delays in the development cycle if not planned and managed correctly.

Secondly, it can be resource-intensive, requiring a test environment that mirrors the production environment as closely as possible. Moreover, due to the extended duration of the test, any disruptions or inconsistencies in the test environment can impact the validity of the test results.

Lastly, detecting and diagnosing issues can be more complex in endurance testing. Issues such as memory leaks or resource exhaustion can be subtle and may require careful monitoring and analysis to detect and diagnose. Despite these challenges, endurance testing is a critical component of performance testing, providing insights and assurances that short-term testing methods cannot.

4) Spike Testing

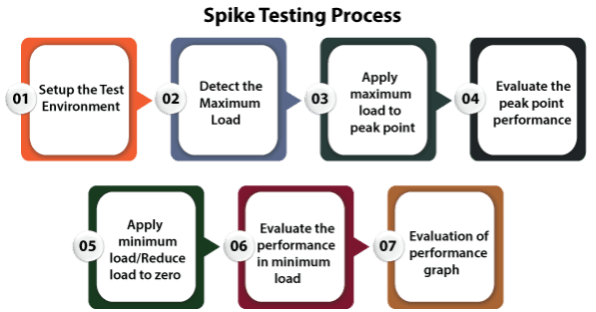

Spike testing is a specialized form of performance testing that examines the resilience and adaptability of an application or system under sudden, extreme increases in load, referred to as ‘spikes.’ These spikes often mimic real-world scenarios, such as a surge in user traffic during peak hours or unexpected events. Spike testing is essential to ensure an application or system’s robustness.

The primary objective of spike testing is to ascertain whether the application or system can efficiently manage unexpected surges in load without experiencing performance degradation or failure. In other words, it tests the system’s elasticity, ensuring it can scale up to meet demand and then scale back down as the spike subsides.

The methodology for spike testing involves intentionally injecting sudden, extreme loads onto the system and observing how it responds. Automated testing tools often achieve this by simulating an abrupt increase in traffic or enabling testers to recreate the spike.

In a typical spike testing scenario, testers first subject the system to a standard load. They then introduce a spike that significantly increases the load for a short time, after which the load returns to normal levels. Testers typically repeat this cycle several times to evaluate the system’s ability to handle multiple load spikes.
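The cycle just described can be expressed as a per-second schedule of target user counts that a load tool then follows. The sketch below is a hypothetical helper, not an API of any specific tool; it ends with one more baseline period so the system’s behavior after the last spike can be observed.

```python
from typing import List


def spike_profile(base_users: int, spike_users: int,
                  base_seconds: int, spike_seconds: int,
                  cycles: int) -> List[int]:
    """Per-second target user counts: a baseline period followed by an abrupt
    spike, repeated `cycles` times, then a final baseline period."""
    profile: List[int] = []
    for _ in range(cycles):
        profile += [base_users] * base_seconds    # standard load
        profile += [spike_users] * spike_seconds  # sudden, extreme load
    profile += [base_users] * base_seconds        # observe recovery after the last spike
    return profile


print(spike_profile(base_users=100, spike_users=1000,
                    base_seconds=3, spike_seconds=2, cycles=2))
# [100, 100, 100, 1000, 1000, 100, 100, 100, 1000, 1000, 100, 100, 100]
```

Real tests use much longer periods; the short durations here only keep the output readable.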

One of the significant challenges with spike testing is the unpredictability of the results. Because spike testing involves testing the system’s response to sudden, extreme load increases, the results can vary widely based on factors such as the system’s architecture, available resources, and workload.

Another challenge is defining what constitutes a ‘spike.’ In real-world scenarios, spikes can vary greatly in duration, intensity, and frequency. Therefore, defining an appropriate spike for testing can be challenging and may require a deep understanding of the system’s usage patterns and potential load scenarios.

Lastly, accurately simulating a spike can be technically challenging. It requires sophisticated testing tools capable of generating and controlling extreme load levels. Also, interpreting the results of spike testing and identifying bottlenecks or performance issues requires a high degree of technical expertise.

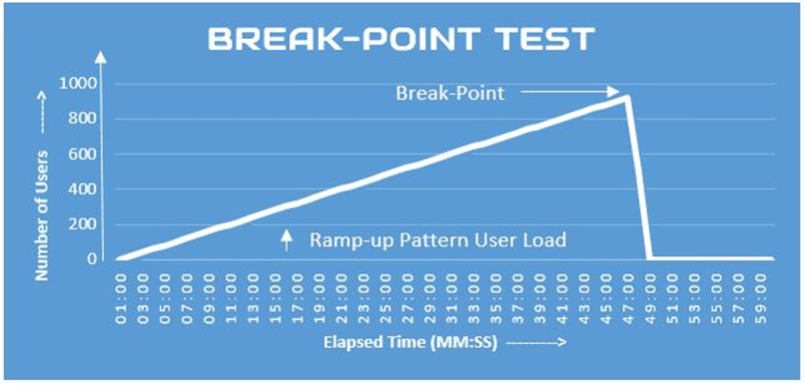

5) Breakpoint Testing

Breakpoint testing is a critical tool in a developer’s arsenal, used during software development to identify and correct defects in the code. In essence, a breakpoint is a marker set at a specific line in the code where a developer suspects a fault may be present. When the program runs, execution halts at the breakpoint, enabling the developer to thoroughly inspect the program’s state and behavior at that precise moment.

The purpose of breakpoint testing is two-fold. First, it aids in detecting defects in the code during the development phase. This preemptive approach ensures that the software functions correctly and is free of bugs before its release to end users. Second, it provides developers with a means to understand and navigate the flow of execution within the program, enhancing their ability to create high-quality and efficient code.

The methodology of breakpoint testing is straightforward and intuitive. A developer will start by setting a breakpoint at a specific line of code where a potential defect is suspected. Developers accomplish this by using a debugging tool or an integrated development environment (IDE), such as Visual Studio or Eclipse, both of which support setting breakpoints. The program pauses at each breakpoint when executed. This allows the developer to examine the program’s state at that exact line of code, including the values of variables, the state of memory, and the call stack. If the developer discovers an issue, they can modify the code to fix the problem, verify the fix by continuing the execution, and set new breakpoints as needed for further diagnosis and debugging of the program.

Despite its many benefits, breakpoint testing comes with its challenges. For one, it can be time-consuming, especially for larger, more complex programs with multiple potential fault points. Additionally, setting too many breakpoints might disrupt the flow of execution and make the debugging process more confusing.

Furthermore, it can be tricky to pinpoint the exact location for a breakpoint in complex codebases. Also, the issue of Heisenbugs, bugs that change their behavior when observed (such as when a breakpoint is set), can make defects elusive and harder to diagnose and fix. Despite these challenges, breakpoint testing remains an invaluable strategy in software development, helping to ensure the creation of reliable, high-performing software applications.

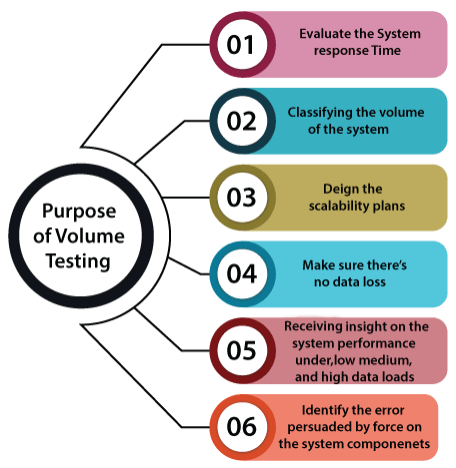

6) Volume Testing

Volume testing is a specialized form of performance testing designed to evaluate an application’s or system’s performance under a substantial volume of data or transactions. This form of testing is critical for systems expected to handle significant data quantities or perform a high number of transactions. The primary purpose of volume testing is to establish the maximum amount of data or transactions the system can handle while maintaining optimal performance levels. By identifying performance issues early, organizations can proactively mitigate potential performance problems, ensuring the system can handle future growth and scalability requirements.

Volume testing involves simulating a high volume of data or transactions, often exceeding the maximum anticipated usage, to identify limitations or bottlenecks in the system. Testers can generate this volume with automated tools or by manually entering data and performing transactions. The process begins with defining the test conditions, then designing and creating test cases that generate the desired data volume. Testers then monitor the system for performance degradation, system failure, or other issues related to data handling.
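As a small, self-contained illustration, the sketch below uses Python’s built-in `sqlite3` module with an in-memory database as a stand-in for the system under test. It loads increasing volumes of generated rows into a hypothetical `orders` table, checks that every row was stored, and times the same aggregate query at each volume. A real volume test would target a production-like environment rather than an in-memory database.

```python
import sqlite3
import time
from typing import Dict, Iterable


def time_query_at_volumes(volumes: Iterable[int]) -> Dict[int, float]:
    """Return {row_count: query_seconds} for each requested data volume."""
    results: Dict[int, float] = {}
    for rows in volumes:
        conn = sqlite3.connect(":memory:")
        try:
            conn.execute(
                "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer INTEGER, amount REAL)"
            )
            # Generate synthetic test data: 1,000 customers with varying amounts
            conn.executemany(
                "INSERT INTO orders (customer, amount) VALUES (?, ?)",
                ((i % 1000, float(i % 97)) for i in range(rows)),
            )
            conn.commit()
            # Data-handling check: every generated row must have been stored
            stored = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
            if stored != rows:
                raise RuntimeError(f"expected {rows} rows, found {stored}")
            start = time.perf_counter()
            conn.execute(
                "SELECT customer, SUM(amount) FROM orders GROUP BY customer"
            ).fetchall()
            results[rows] = time.perf_counter() - start
        finally:
            conn.close()
    return results


for rows, seconds in time_query_at_volumes([10_000, 100_000, 500_000]).items():
    print(f"{rows:>9} rows: {seconds * 1000:.1f} ms")
```

The interesting signal is how query time grows relative to data volume: roughly proportional growth is expected for a full-table aggregate, while a sharper rise points to a bottleneck worth investigating.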

Volume testing presents several challenges. One significant challenge is setting up a test environment that accurately reflects the production environment, especially regarding data volume. Testers may also face challenges in creating realistic test data covering all possible scenarios. Furthermore, analyzing the results of volume testing can be complex due to the high volume of data involved. Despite these challenges, volume testing remains crucial for systems expected to handle high data volumes, ensuring they can meet future growth and scalability demands.

7) Compatibility Testing

Compatibility testing is integral to software quality assurance, ensuring an application or system operates correctly across various environments. Its primary goal is to validate the software’s compatibility with different hardware configurations, operating systems, network environments, browsers, and devices. Compatibility testing ensures that the end-user experience remains consistent and satisfactory, regardless of the myriad of technologies they might use to interact with the software.

Compatibility testing has grown in significance as technology has diversified. The sheer number of device types, operating systems, browser versions, and network configurations a modern application must support can be staggering. Ensuring compatibility across all these configurations is vital to providing a positive user experience and maintaining a broad user base.

Compatibility testing can involve both manual and automated testing approaches. Manual testing might involve physically setting up different hardware configurations or using different devices to test the application. On the other hand, automated testing can use virtualization technology or device emulation software to simulate different environments, making the process faster and more efficient.

The most significant challenge in compatibility testing is the number of potential configurations. With numerous combinations of hardware, operating systems, browsers, and network environments, it is impossible to test them all thoroughly. Hence, testers need to prioritize based on user data and market share. Another challenge is the constant evolution of technology. Continual releases of new devices, operating system updates, and browser versions necessitate continuously updating the testing matrix.
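Prioritizing by user data can be sketched as a greedy selection: sort configurations by their share of real users and keep adding them until a target coverage is reached. The configuration names and shares below are invented for illustration; in practice they would come from your own analytics.

```python
from typing import List, Tuple


def prioritize_configs(usage: List[Tuple[str, float]],
                       target_coverage: float) -> List[str]:
    """Pick the most-used configurations until their combined share of users
    reaches target_coverage (0.0 to 1.0)."""
    selected: List[str] = []
    covered = 0.0
    for name, share in sorted(usage, key=lambda item: item[1], reverse=True):
        if covered >= target_coverage:
            break
        selected.append(name)
        covered += share
    return selected


# Invented usage shares per browser/OS configuration
usage = [
    ("Chrome/Windows", 0.42),
    ("Safari/iOS", 0.25),
    ("Chrome/Android", 0.18),
    ("Edge/Windows", 0.08),
    ("Firefox/Linux", 0.04),
]
print(prioritize_configs(usage, target_coverage=0.80))
# ['Chrome/Windows', 'Safari/iOS', 'Chrome/Android']
```

Re-running the selection whenever the usage data changes is one way to keep the testing matrix current as new devices and versions appear.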

Additionally, whether physical or virtual, maintaining the infrastructure for compatibility testing can be costly and complex. Despite these challenges, compatibility testing is essential in today’s diverse technology landscape to ensure that an application or system provides a consistent and satisfactory user experience across all supported configurations.

8) Latency Testing

Latency testing is like checking how quickly someone responds to a text message. It’s all about measuring how long a system or application takes to react to our actions. In today’s fast-paced world, nobody wants to wait around for things to happen, and latency testing helps us ensure that our apps and systems are highly responsive and provide a smooth user experience.

To do this, we simulate user actions and measure how long the system takes to respond. We carefully record these response times to pinpoint delays or bottlenecks, which shows us where we can make things faster and more efficient.

Of course, there are some challenges. Creating a testing environment that truly reflects how the system will be used in the real world can be tricky, and it can sometimes be hard to understand exactly what the test results are telling us. But by understanding the latency of our systems, we can make them faster, more responsive, and ultimately more enjoyable for users.
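Averages can hide slow outliers, so latency results are often reported as percentiles. The sketch below times a callable repeatedly with `time.perf_counter()` and reports nearest-rank p50, p95, and p99 values in milliseconds. The `lambda` that sleeps for two milliseconds is a hypothetical placeholder for a real user action.

```python
import math
import time
from typing import Callable, Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def measure_latency(action: Callable[[], object], runs: int = 100) -> Dict[str, float]:
    """Time `action` repeatedly and report latency percentiles in milliseconds."""
    latencies_ms: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        action()
        latencies_ms.append((time.perf_counter() - start) * 1000)
    return {name: percentile(latencies_ms, pct)
            for name, pct in (("p50", 50), ("p95", 95), ("p99", 99))}


# Hypothetical user action: replace with a real request to the system under test
stats = measure_latency(lambda: time.sleep(0.002), runs=50)
print(stats)
```

A large gap between p50 and p99 is often more telling than the median itself, because it shows how badly the slowest users are served.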

9) Concurrent Client Testing

Imagine a popular online game with thousands of players all trying to log in and play at the same time. Will the game crash? Will everyone experience lag? Concurrent client testing helps us answer these questions. It’s a focused form of load testing, designed to see how the system performs when many users are trying to access it at once. We simulate a large number of users interacting with the system simultaneously to see whether it remains stable, responsive, and free of errors. This is crucial for applications like online games, streaming platforms, and e-commerce websites, especially during peak hours.

We carefully monitor key metrics such as how long the system takes to respond, how much data it can handle, how many errors occur, and how heavily system resources are being used. While simulating many users and interpreting the results can be tricky, concurrent client testing is essential for ensuring a smooth and enjoyable experience for all users.
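A minimal way to simulate many simultaneous clients in Python is a thread pool in which each thread plays one client. In the sketch below, `fake_request` is a hypothetical stand-in for a real call to the system under test: it sleeps briefly and fails for every 25th client, purely for illustration. Dedicated load-testing tools scale far beyond what a single Python process can generate, so treat this as an illustration of the measurements rather than a load generator.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ClientResult:
    ok: bool          # did the client's request succeed?
    latency_s: float  # how long the request took, in seconds


def run_client(client_id: int, request: Callable[[int], None]) -> ClientResult:
    start = time.perf_counter()
    try:
        request(client_id)
        ok = True
    except Exception:
        ok = False  # count any exception as an error seen by this client
    return ClientResult(ok, time.perf_counter() - start)


def run_concurrent_clients(n_clients: int, request: Callable[[int], None]) -> dict:
    if n_clients < 1:
        raise ValueError("need at least one client")
    # One thread per client so that all requests are in flight at the same time
    with ThreadPoolExecutor(max_workers=n_clients) as pool:
        results: List[ClientResult] = list(
            pool.map(lambda cid: run_client(cid, request), range(n_clients))
        )
    errors = sum(1 for r in results if not r.ok)
    return {
        "clients": n_clients,
        "errors": errors,
        "error_rate": errors / n_clients,
        "max_latency_s": max(r.latency_s for r in results),
    }


def fake_request(client_id: int) -> None:
    """Hypothetical request to the system under test."""
    time.sleep(0.01)
    if client_id % 25 == 0:
        raise ConnectionError("simulated failure")


print(run_concurrent_clients(100, fake_request))
```

With 100 clients the summary reports 4 errors (clients 0, 25, 50, and 75); the latency figure varies from run to run.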

10) Throughput Testing

Throughput testing helps us understand how much work our software or system can handle in a given amount of time. Imagine a busy restaurant trying to figure out how many orders the kitchen can handle without things getting chaotic. That’s essentially what throughput testing does! We simulate a large number of requests to see how quickly the system can process them, how much data it can transfer, and how many users it can serve simultaneously. This is crucial for systems that need to handle a lot of traffic, such as websites with many visitors or systems that process large amounts of data.

We use load-generation tools to produce these requests and monitor the system’s response. While valuable, throughput testing can be challenging: generating a realistic workload and interpreting the results both require careful consideration. However, by understanding the limits of our system, we can ensure it can handle the demands of our users and keep things running smoothly.
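Throughput is simply completed work divided by elapsed time. The sketch below keeps a few worker threads calling an operation until a deadline and reports requests per second and bytes per second. `fake_operation` and its 1,024-byte "response" are hypothetical placeholders; a real test would call the system under test and count the bytes actually transferred.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Tuple


def measure_throughput(operation: Callable[[], int], duration_s: float,
                       workers: int = 4) -> Dict[str, float]:
    """Call `operation` from `workers` threads until `duration_s` elapses.

    `operation` is assumed to return the number of bytes it transferred.
    """
    start = time.perf_counter()
    deadline = start + duration_s

    def worker() -> Tuple[int, int]:
        count, transferred = 0, 0
        while time.perf_counter() < deadline:
            transferred += operation()
            count += 1
        return count, transferred

    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(worker) for _ in range(workers)]
        totals = [f.result() for f in futures]
    elapsed = time.perf_counter() - start
    requests = sum(count for count, _ in totals)
    transferred = sum(nbytes for _, nbytes in totals)
    return {
        "requests": requests,
        "requests_per_s": requests / elapsed,
        "bytes_per_s": transferred / elapsed,
    }


def fake_operation() -> int:
    """Hypothetical request: takes about 5 ms and 'returns' 1,024 bytes."""
    time.sleep(0.005)
    return 1024


print(measure_throughput(fake_operation, duration_s=0.5, workers=4))
```

Repeating the measurement with increasing `workers` values shows where throughput stops growing, which is the limit the restaurant analogy describes.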

Bonus: Benchmark Testing

Benchmark testing is a method of performance testing that measures an application’s efficiency by comparing it against established standards or the performance of other similar systems. It’s a key process for applications expected to perform at certain industry levels, like financial transaction systems, database servers, or cloud-based applications.

During benchmark testing, specific performance indicators such as transaction speed, system throughput, latency, and resource utilization are scrutinized. These indicators help in understanding how well an application performs in comparison to competitors or against a set of predefined criteria.
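When the benchmark is a set of predefined criteria, the comparison step can be as simple as checking each measured indicator against a limit. The sketch below uses hypothetical criteria and made-up measurements; comparing against a competitor’s or a previous release’s results would work the same way, with those results supplying the limits.

```python
from typing import Dict, List, Tuple

# Hypothetical predefined criteria: metric -> ("max" or "min", limit)
CRITERIA: Dict[str, Tuple[str, float]] = {
    "p95_latency_ms": ("max", 250.0),
    "throughput_rps": ("min", 500.0),
    "cpu_utilization": ("max", 0.75),
}


def compare_to_benchmark(measured: Dict[str, float],
                         criteria: Dict[str, Tuple[str, float]]) -> List[str]:
    """Return a description of every criterion the measurements fail to meet."""
    failures: List[str] = []
    for metric, (kind, limit) in criteria.items():
        if kind not in ("max", "min"):
            raise ValueError(f"unknown criterion kind {kind!r} for {metric}")
        value = measured.get(metric)
        if value is None:
            failures.append(f"{metric}: not measured")
        elif kind == "max" and value > limit:
            failures.append(f"{metric}: {value} exceeds maximum {limit}")
        elif kind == "min" and value < limit:
            failures.append(f"{metric}: {value} is below minimum {limit}")
    return failures


# Made-up measurements from a benchmark run
measured = {"p95_latency_ms": 310.0, "throughput_rps": 620.0, "cpu_utilization": 0.70}
print(compare_to_benchmark(measured, CRITERIA))
# ['p95_latency_ms: 310.0 exceeds maximum 250.0']
```

Treating a missing metric as a failure avoids silently passing a benchmark that was only partly measured.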

Executing benchmark testing can involve intricacies, such as simulating realistic operating conditions and analyzing comparative data. It is crucial to accurately interpret the results for effective performance optimization. Addressing areas where an application falls short can be resource-intensive, but it’s essential for ensuring that the application can deliver an optimal user experience and maintain a competitive edge in the market.


Taking Measure of Testing Options

Performance testing is a critical aspect of software development that ensures applications and systems are ready to deliver optimal performance in real-world scenarios. Each type of performance testing plays a unique and vital role in this process.

- Load testing helps organizations understand how their application or system will perform under the expected user load, ensuring stability and availability even during peak usage.

- Stress testing enables teams to identify an application or system’s breaking points or safe usage limits, leading to improved resilience and robustness.

- Scalability testing ensures that the application can handle growth in terms of users, data volume, and transaction volume, making it indispensable for forward-looking development.

- Compatibility testing verifies that the application or system functions correctly across various environments, including hardware, operating systems, network environments, and other software, ensuring a smooth user experience.

- Latency testing assesses the responsiveness of an application, which is crucial for applications requiring real-time interaction, thereby enhancing user satisfaction.

- Concurrent client testing and throughput testing measure, respectively, the system’s performance when multiple users access the application simultaneously and the amount of work the system can handle in a given time. These tests are critical for systems expecting high user interaction, ensuring seamless operation.

Best practice is for development and quality assurance organizations to integrate performance testing into the early stages of the development cycle and to conduct it regularly throughout. Testing early helps identify and address issues sooner, reducing the expense and complexity of fixes. Automating the testing process wherever possible should also be a high priority, because it improves efficiency and consistency.

Looking to the future, as software systems become increasingly complex and user expectations for performance continue to rise, performance testing will only become more critical. With AI and machine learning advancements, we can expect more intelligent performance testing tools capable of predicting and diagnosing performance issues even more effectively.

If you’re looking to get started with load testing, be sure to sign up for a free trial of LoadView today!