Identifying performance issues before they affect the end user is vital to the success of any application in development. Users will avoid applications that do not perform well, with most opting to move on to software that has been properly tested.

Database load testing is an important step that developers must take to ensure performance is optimized before deployment. LoadView is one of the best tools to use for database load testing and has become extremely popular with developers.

This guide will provide you with everything you need to know about testing your database performance using LoadView. The basics of load testing and database software will also be covered to ensure a better understanding of the process.

How is Load Testing Relevant to Database Performance Issues?

Load testing is a type of software performance testing that simulates the expected traffic load on a system. The system or application is then monitored under the simulated load to see how it copes. This process identifies issues or bottlenecks that arise at higher loads so they can be resolved before they reach the end user.

Load testing is vital to the development process and ensures that a system can manage the expected load and still function properly. Systems and applications that have not been load-tested will often encounter unexpected issues that disrupt service for users.

Why Database Performance Matters

Database performance plays a large role in the overall performance of an application. The database is typically responsible for housing all of the vital data relevant to an application. If the database is not performing properly, the entire application will suffer.

Slow response times, timeouts, and system crashes can all result from an untested database that is not performing optimally. Indexing issues, slow database queries, and inefficient database design will all result in an application performing poorly, especially as the size of the database increases.

Common Database Performance Issues

Load testing is important for identifying several common database issues that may arise. Some of the most common issues that testing helps identify have been detailed in the sections below.

Insufficient Hardware Resources

If the hardware resources allocated to the database are insufficient, performance is likely to suffer. The database may run slowly and crash intermittently because it lacks the resources it needs.

Load testing can help to ensure that the database has access to the resources needed to perform optimally under the anticipated load. Increasing the RAM, CPU, or disk space can help to resolve this issue.

Inefficient Database Queries

Inefficient queries waste valuable resources and can ultimately slow down a database. Load testing can simulate realistic queries to check how the database performs under them. Inefficient queries can be resolved by analyzing the query execution plan, identifying the resource-intensive queries, and optimizing them.
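As a minimal sketch of inspecting an execution plan, the example below uses Python's built-in sqlite3 module and SQLite's EXPLAIN QUERY PLAN statement. The orders table, its columns, and the index name are made up for illustration. Other database engines expose plans through their own commands and output formats.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return the human-readable 'detail' column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]

# Hypothetical schema and data, used only for this illustration.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

sql = "SELECT total FROM orders WHERE customer_id = ?"

# Without an index on customer_id, SQLite has to scan the whole table.
plan_before = query_plan(conn, sql, (42,))

# After adding an index, the plan should switch to an index search.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql, (42,))

print(plan_before)
print(plan_after)
```

A plan that reports a scan on a large table for a frequently run query is a common sign that the query or its indexes deserve a closer look.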

Poor Database Design

A poorly designed database will often have significant performance issues. Load testing can be used to identify the impact of the design on the overall performance of the system or application. A poorly designed database will often have to be reworked and optimized for performance. This can include editing elements such as tables, indexes, and data types.

Poor Indexing Strategy

A poor indexing strategy can also affect the overall performance of a database. Load testing can help identify the overall impact of the current indexing strategy on performance. This process involves analyzing the indexing strategy and identifying areas that are affecting performance. Elements that are typically inspected for optimization include poorly indexed tables or columns.

Inadequate Database Configuration

The overall configuration of the database will also play a significant role in performance. Load testing will allow for different configurations to be tested to identify the one that is optimal for the expected load. Once the current database configuration has been analyzed, parameters that are affecting performance can be optimized.

Lack Of Realistic Test Data

Without the use of realistic test data, it is difficult to gauge how a database will perform under the expected load. Load testing can accurately represent the expected load to monitor how the database performs. Testing without realistic data can lead to inaccurate results, which is why using real-world scenarios is so important.
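One way to make test data more realistic is to avoid perfectly uniform values, since real workloads are often skewed toward a few very active records. The sketch below assumes a hypothetical customer table and draws customer IDs with a weight of 1/rank, so low-numbered customers appear far more often. The function name, the distribution, and the sizes are illustrative assumptions rather than a recommendation for any specific application.

```python
import random
from collections import Counter

def skewed_customer_ids(n_rows, n_customers, seed=0):
    """Draw n_rows customer IDs where customer k is picked with weight 1/k."""
    rng = random.Random(seed)  # fixed seed so test runs are repeatable
    ids = list(range(1, n_customers + 1))
    weights = [1.0 / rank for rank in ids]
    return rng.choices(ids, weights=weights, k=n_rows)

sample = skewed_customer_ids(10_000, 100)
counts = Counter(sample)

# The most active customer should dominate the least active one.
print(counts[1], counts[100])
```

Seeding the generator keeps the data set identical between runs, which makes results from successive load tests easier to compare.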

Inadequate Monitoring

Many problems with a database go unnoticed due to inadequate monitoring tools. Load testing with LoadView provides the tools needed to identify bottlenecks and performance issues, which makes it easier to find the areas that need improvement. Crucial performance indicators such as CPU, RAM, and I/O usage can all be monitored during testing to ensure accurate results.

Why Load Testing Is Essential for Database Performance

Load testing is the easiest and most effective way to test how a database performs under the expected load. It confirms that a database is working properly and helps improve response times and reduce the frequency of crashes and timeouts before the application reaches the end user.

After load testing, bottlenecks, scalability issues, and inefficiencies in the database architecture can all be identified and resolved to optimize performance. In addition, testing will help highlight slow queries, poor indexing, and other issues that may cause poor performance.

Simulating the expected load on the system will help to determine how well the database performs with increasing amounts of data and users. The simulation of real-world usage will also help to evaluate the effectiveness of the database’s design.

Choosing not to load-test a database will often lead to performance issues that are not discovered until the application is in production. This leads to user frustration, data loss, and potential loss of revenue. All of these issues can be avoided by simply load-testing the database before release.

Database Issues That Load Testing Can Reveal

Load testing can be used in several different ways to identify database performance issues. The most common of these are detailed below.

Identifying Bottlenecks

Load testing is especially helpful for identifying bottlenecks in the system that are affecting database performance. For example, if there are slow queries or inefficient indexing, load testing can help highlight those issues and pinpoint where the bottleneck is occurring.

Measuring Scalability

Load testing can also measure a database’s level of scalability. This is done by simulating a steadily increasing load on the system to measure how the database handles increases in data and users. If the database does not perform as expected, it can be redesigned with scalability in mind.
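A simple way to read the results of a steadily increasing load is to compare each step against the first one and note where response times start to degrade. The sketch below assumes the results are available as (concurrent users, average response time in ms) pairs. The function name, the 2x slowdown limit, and the sample figures are invented for illustration and are not real measurements.

```python
def first_degraded_step(steps, max_slowdown=2.0):
    """Return the user count of the first step whose average response time
    exceeds max_slowdown times the first (baseline) step, or None.

    steps: list of (concurrent_users, avg_response_ms), ordered by users.
    """
    baseline_ms = steps[0][1]
    for users, avg_ms in steps:
        if avg_ms > baseline_ms * max_slowdown:
            return users
    return None

# Made-up example figures, not real measurements.
example_steps = [(50, 200), (100, 210), (150, 260), (200, 480), (250, 1500)]
knee = first_degraded_step(example_steps)
print(knee)
```

The step where this check first fires gives a rough upper bound on the load the current design handles comfortably.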

Evaluating Database Design

By simulating real-world usage patterns, load testing can measure the effectiveness of a database’s design. The database schema may be analyzed and optimized for performance in areas that need improvement.

Measuring Response Times

Load testing may also be used to measure database response times. If the database is not adequately optimized, query responses may be slower than normal. Testing can help measure the response time and alert developers if it is poor.
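Averages can hide slow outliers, so response times are often summarized with percentiles. The sketch below computes a nearest-rank percentile and checks it against a threshold. The function names, the 500 ms threshold, and the sample timings are assumptions made up for this example.

```python
import math

def percentile(samples_ms, pct):
    """Nearest-rank percentile of a non-empty list of response times (ms)."""
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def exceeds_threshold(samples_ms, pct, threshold_ms):
    """True if the chosen percentile is slower than the allowed threshold."""
    return percentile(samples_ms, pct) > threshold_ms

# Made-up timings: nine fast responses and one slow outlier.
response_times_ms = [120, 135, 110, 900, 125, 140, 130, 115, 128, 122]

print(percentile(response_times_ms, 50))
print(percentile(response_times_ms, 95))
print(exceeds_threshold(response_times_ms, 95, 500))
```

In this sample the median looks healthy while the 95th percentile does not, which is the kind of gap a single average would obscure.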

Measuring Resource Utilization

Load testing can also identify whether or not a database is using resources optimally. A poorly optimized database will often use more resources than necessary, including CPU and memory, causing poor application performance.

Database Deadlocks

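A deadlock occurs when two or more transactions each hold a lock that another one needs, so none of them can proceed. For example, one transaction locks row A and waits for row B while another locks row B and waits for row A. Most database engines resolve this by aborting one of the transactions, which shows up as errors, retries, and poor response times. Because deadlocks usually appear only under concurrent access, load testing can expose the areas of the database where they are possible so they can be remedied.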
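Conceptually, a deadlock is a cycle in a wait-for graph, where each transaction points to the transaction holding the lock it needs. The sketch below is a simplified illustration in which every transaction waits on at most one other. The transaction names are hypothetical, and real database engines use their own internal detection mechanisms.

```python
def find_deadlock(waits_for):
    """Return the list of transactions forming a wait cycle, or None.

    waits_for maps a transaction to the single transaction it is waiting on.
    """
    for start in waits_for:
        seen = []
        current = start
        # Follow the chain of waits until it ends or revisits a transaction.
        while current in waits_for and current not in seen:
            seen.append(current)
            current = waits_for[current]
        if current in seen:
            return seen[seen.index(current):]
    return None

# T1 waits for T2 and T2 waits for T1: a deadlock.
print(find_deadlock({"T1": "T2", "T2": "T1"}))

# T1 waits for T2, T2 waits for T3, T3 waits for nothing: no deadlock.
print(find_deadlock({"T1": "T2", "T2": "T3"}))
```

A common way to avoid such cycles in application code is to have every transaction acquire its locks in the same order.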

Database Indexing Issues

Load testing can also identify indexing issues by evaluating the indexing strategy. A poor indexing strategy can result in slow response time and overall poor application performance.

Load Testing a Database With LoadView: Step-By-Step

Now that all of the basics of database performance and load testing have been covered, the following steps can be used to load test a database using LoadView. The process has been streamlined due to the easy-to-use interface for creating and running tests that LoadView provides.

Step 1: Create a New Test in LoadView

- In LoadView, select the load testing option. The ‘New Test’ button appears in the top right corner.

- Create a new test and select Web Application as the load testing type.

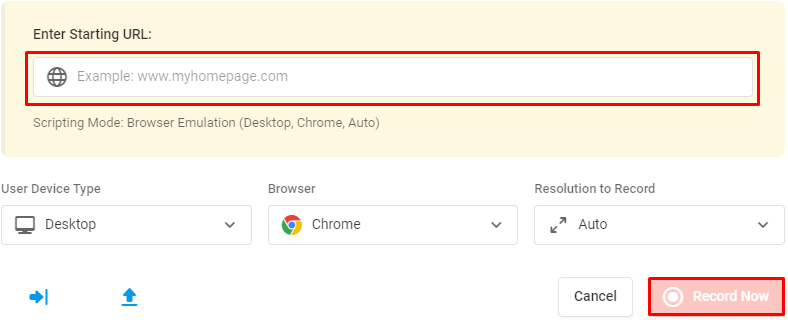

- Enter the URL of the application or website and click Record Now.

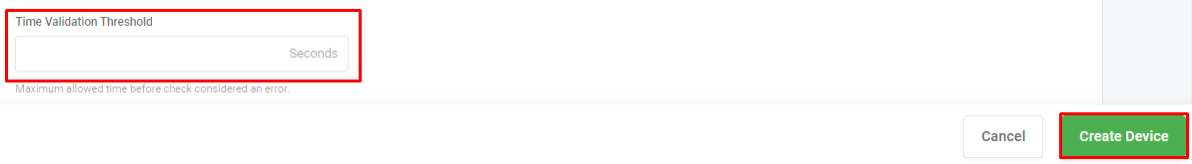

- In Configure Task, use the Time Validation Threshold to set the maximum time allowed before the check is considered an error. Enter the time limit in seconds.

- Click Create a Device and validate the URL in the Device section.

Step 2: Select Load Type

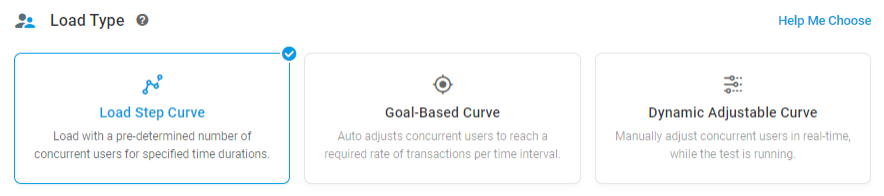

There are three load types to choose from:

- Load Step Curve: Load with a pre-determined number of concurrent users for specified time durations.

- Goal-Based Curve: Automatically adjusts concurrent users to reach a required rate of transactions per time interval.

- Dynamic Adjustable Curve: Manually adjust concurrent users in real-time, while the test is running.
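To get a feel for the numbers behind a goal-based test, Little's Law gives a rough estimate of the concurrency needed to sustain a target transaction rate. The sketch below is a back-of-the-envelope calculation that assumes one transaction per session. It does not describe how LoadView adjusts users internally, and the function and parameter names are hypothetical.

```python
import math

def users_for_goal(transactions_per_minute, avg_session_seconds):
    """Estimate concurrent users needed for a target rate (Little's Law):
    users = arrival rate x average time each session spends in the system.
    """
    return math.ceil(transactions_per_minute * avg_session_seconds / 60)

# 120 transactions per minute with 30-second sessions.
print(users_for_goal(120, 30))
```

If sessions slow down under load, the same target rate needs more concurrent users, which is why the user count in a goal-based test moves as the test runs.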

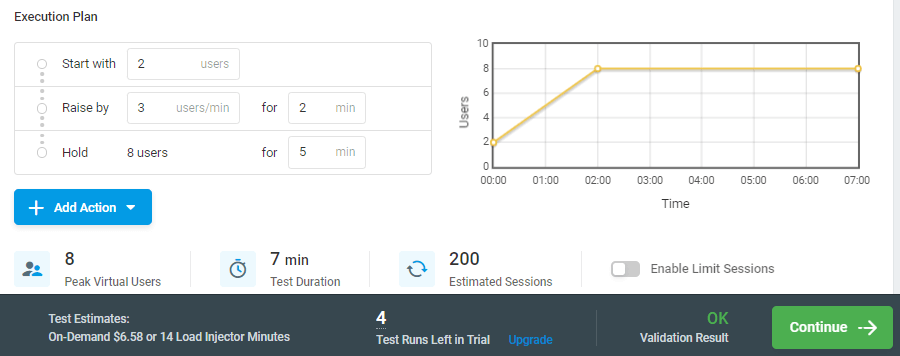

This example uses the Load Step Curve:

- Under the execution plan, set the number of users to start with and the number of users to add at each step.

- Under Load Injector Geo Distribution, select the zone (region) that the load will be generated from.

- Click Continue and start the test.
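The shape of a step curve can be sketched in a few lines of code. The example below only illustrates how the user count grows from a starting value by a fixed increment per step. The parameter names are hypothetical and do not correspond to LoadView settings or any LoadView API.

```python
def step_curve(start_users, users_per_step, step_minutes, total_minutes):
    """Return (minute, concurrent_users) at the start of each step."""
    schedule = []
    users = start_users
    for minute in range(0, total_minutes, step_minutes):
        schedule.append((minute, users))
        users += users_per_step
    return schedule

# Start with 10 users and add 10 more every 5 minutes for 20 minutes.
for minute, users in step_curve(10, 10, 5, 20):
    print(f"minute {minute:>2}: {users} users")
```

Planning the steps this way before configuring the test makes it easier to relate a change in response time to a specific user count.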

Step 3: Run Test and Analyze Results

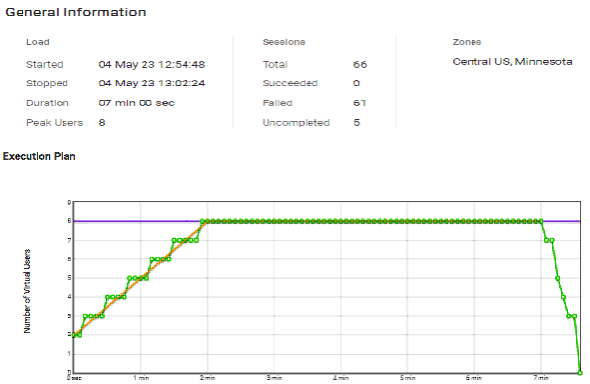

Under General Information we can see the Load, Sessions, and Zone:

- Execution Plan: Shows a graph of the maximum number of users (the load) during the test.

- Average Response Time: Shows the number of sessions started, the average response time, session counts, and errors.

- Load Injector Load: Shows the percentage of CPU load taken by each session.

Note: We have a similar graph for Average Response Time and Load Injector Load.

We can also see the detailed logs of the sessions. Each log lists the steps performed in the application, and each step shows its start time, duration (ms), and status. The logs can be filtered by zone and by successful or failed sessions.
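If session logs are exported for further analysis, the same kind of filtering can be reproduced in code. The sketch below assumes a hypothetical record layout with zone, step name, duration, and status fields. It is not LoadView's export format, and the sample records are made up.

```python
from dataclasses import dataclass

@dataclass
class SessionStep:
    zone: str          # region the session ran from
    step: str          # name of the step performed in the application
    duration_ms: int   # time taken by the step
    ok: bool           # True for success, False for failure

def filter_steps(steps, zone=None, ok=None):
    """Keep steps matching the given zone and/or status (None = no filter)."""
    return [
        s for s in steps
        if (zone is None or s.zone == zone) and (ok is None or s.ok == ok)
    ]

# Made-up records for illustration only.
log = [
    SessionStep("us-east", "open page", 420, True),
    SessionStep("us-east", "submit search", 3100, False),
    SessionStep("eu-west", "open page", 510, True),
]

failed = filter_steps(log, ok=False)
us_east = filter_steps(log, zone="us-east")
print([s.step for s in failed])
print(len(us_east))
```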

Supercharge your database performance and ensure a flawless user experience with LoadView’s powerful load testing capabilities. Don’t leave your database’s performance to chance – take control and optimize it for success.

Sign up for a free trial of LoadView today and experience the difference firsthand!