Each application component or service we write requires some resources in order to execute and function properly. Predicting exactly how many resources are needed can be almost impossible, as there are a lot of moving parts that can influence it. The amount of memory, CPU, or network bandwidth needed can change during the application life cycle as the volume of work changes. Almost all applications have performance requirements we must always meet. As the workload changes, we must be able to maintain the desired performance level. This is where Azure Autoscale comes into play, as it is a mechanism we can use to achieve this.

Autoscaling

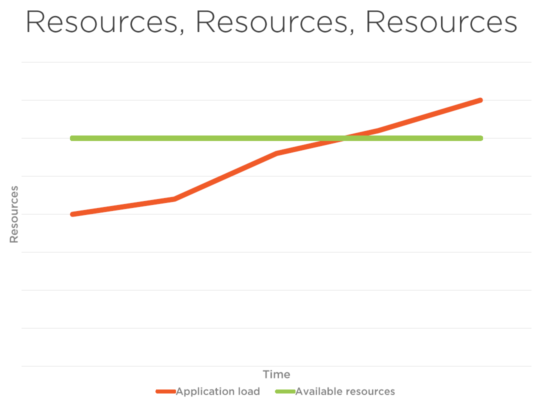

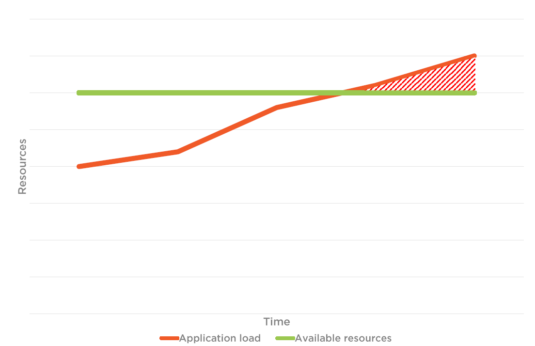

Figure 1 shows the application load against the total limit of the resources. When autoscale is not in place, users who are connected, or who are about to connect, to the web application may face performance issues because the available resources are limited: the load reaches the threshold point depicted in Figure 1.2, and the application cannot cope with the traffic. In Figure 2, however, the available resources increase along with the traffic and application load. This is the advantage of autoscaling.

Figure 1

Figure 1.2

Figure 2

Compute Solutions in Azure

- App Service. Azure App Service is a fully managed web hosting service for building web apps, mobile back ends, and RESTful APIs. From small websites to globally scaled web applications, Azure has the pricing and performance options that fit your needs.

- Azure Cloud Services. Azure Cloud Services is an example of a platform as a service (PaaS). Like Azure App Service, this technology is designed to support applications that are scalable, reliable, and inexpensive to operate. You can install your own software on VMs that use Azure Cloud Services, and you can access them remotely.

- Azure Service Fabric. Azure Service Fabric is a distributed systems platform that makes it easy to package, deploy, and manage scalable and reliable microservices and containers. Service Fabric represents the next-generation platform for building and managing enterprise-class, tier-1, cloud-scale applications running in containers.

- Azure Functions. Azure Functions allow developers to act by connecting to data sources or messaging solutions, which makes it easy to process and react to events. Developers can leverage Azure Functions to build HTTP-based API endpoints accessible by a wide range of applications, mobile devices, and IoT devices.

- Virtual Machines. Azure Virtual Machines let us create and use virtual machines in the cloud as Infrastructure as a Service (IaaS). We can use an image provided by Azure or a partner, or we can use our own image to create the virtual machine.

Types of Autoscaling

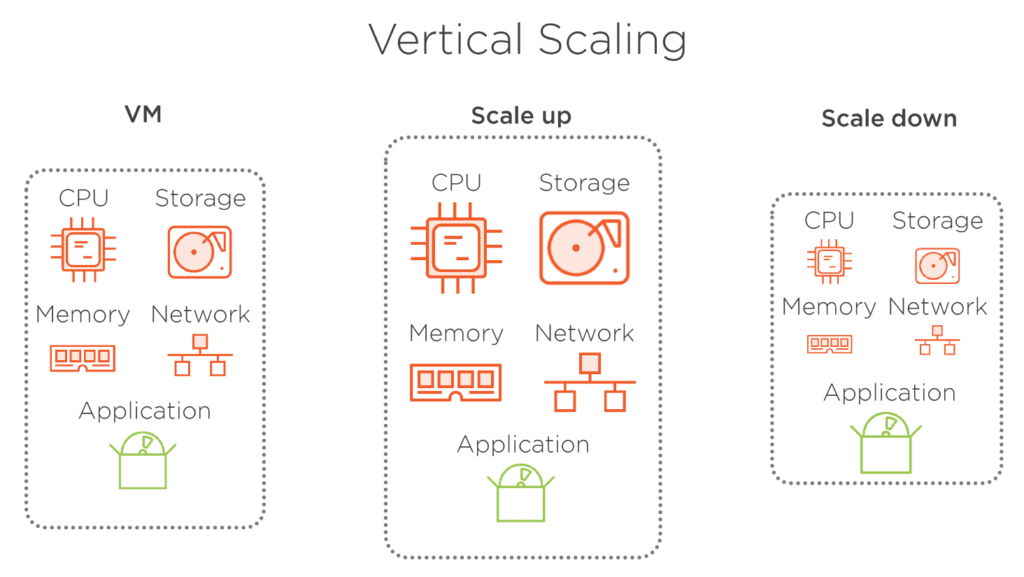

Vertical Autoscaling

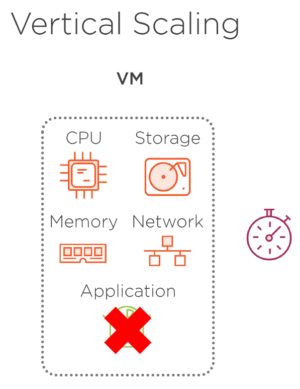

Vertical scaling means we modify the size of a VM. We scale up if we need a bigger VM that has more hardware resources, and we scale down if we do not need all the resources available and want to decrease the size of the VM. The application hosted in this VM remains unchanged in both cases. This type of scaling is not very efficient, especially in a cloud environment, as resource consumption is not optimized. Another main drawback is that the virtual machine needs to be stopped for its size to change. This affects our application, as it must go offline while the VM is stopped, resized, and restarted, and these actions are usually time consuming. Of course, we could keep our VM unchanged and instead provision a new virtual machine with the desired size, then move our application once the new VM is ready. This still requires our application to be offline while it moves, but the process of moving the app is a lot shorter.
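To make the vertical scaling decision more concrete, here is a minimal Python sketch. It picks the smallest VM size that satisfies the CPU and memory requirements and reports whether that means scaling up or down. The size names and the `CATALOG` list are hypothetical and do not correspond to real Azure VM sizes; the sketch only illustrates the idea described above, including the restart that a resize implies.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VmSize:
    name: str
    vcpus: int
    memory_gb: int


# Hypothetical catalog, ordered from smallest to largest (not real Azure sizes).
CATALOG = [
    VmSize("small", 1, 2),
    VmSize("medium", 2, 8),
    VmSize("large", 4, 16),
    VmSize("xlarge", 8, 32),
]


def pick_size(required_vcpus: int, required_memory_gb: int) -> Optional[VmSize]:
    """Return the smallest catalog size that satisfies both requirements."""
    for size in CATALOG:
        if size.vcpus >= required_vcpus and size.memory_gb >= required_memory_gb:
            return size
    return None  # nothing in the catalog is big enough


def plan_resize(current: VmSize, required_vcpus: int, required_memory_gb: int) -> str:
    """Describe the vertical scaling action needed for the new requirements."""
    target = pick_size(required_vcpus, required_memory_gb)
    if target is None:
        return "no size fits; consider scaling out instead"
    if target == current:
        return "keep " + current.name
    direction = "up" if target.vcpus > current.vcpus else "down"
    return f"scale {direction}: {current.name} -> {target.name} (VM restart required)"
```

For example, `plan_resize(CATALOG[1], 3, 10)` describes a scale-up from `medium` to `large`. When no single size is large enough, the function falls back to suggesting horizontal scaling.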

Figure 3

Figure 3.1 – Application becomes unavailable when vertical scaling is put into place as it takes time to restart.

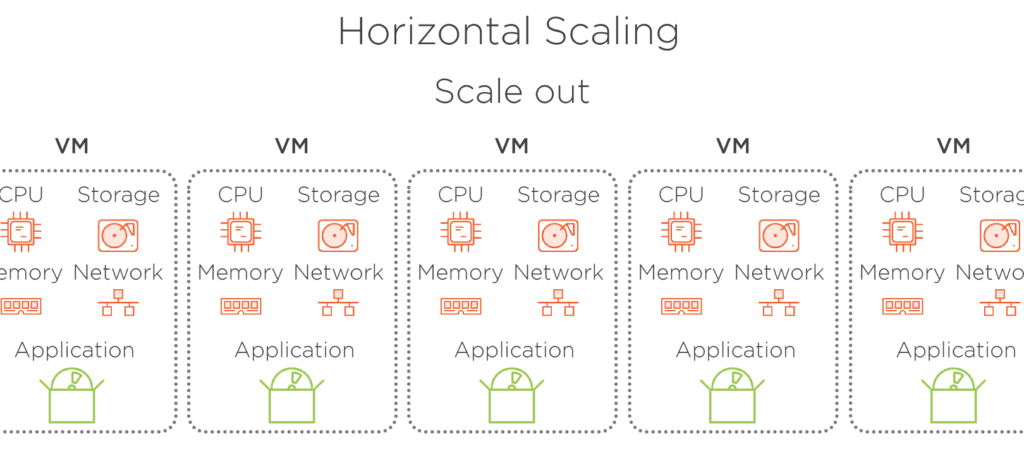

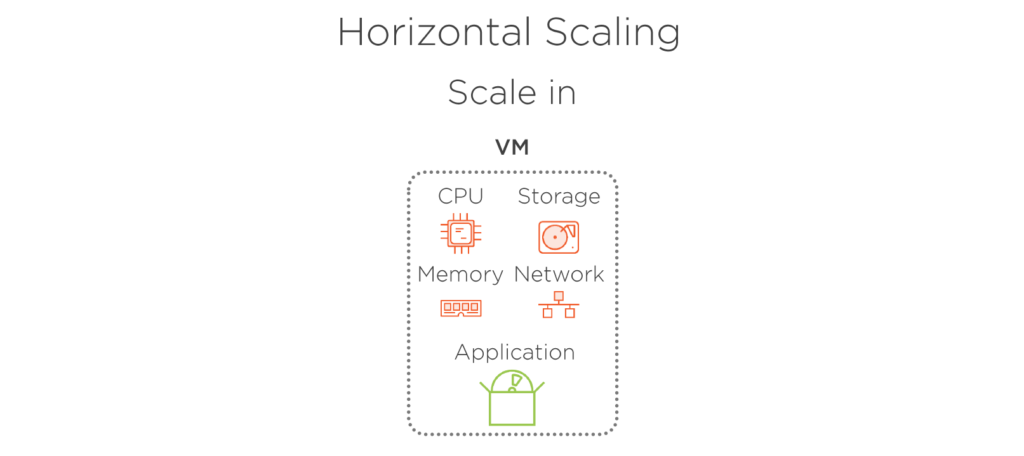

Horizontal Autoscaling

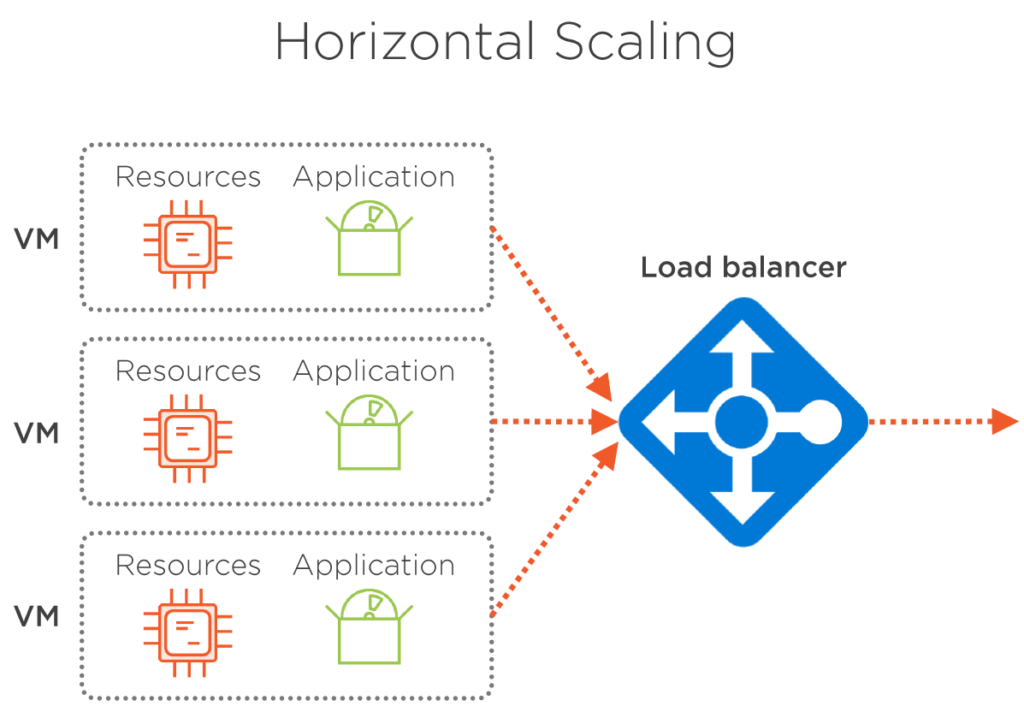

Horizontal scaling means we modify the number of running VMs and maintain the desired performance by dividing the load among several instances of the same VM. The size of the virtual machine remains the same. We increase the number of running VMs by scaling out, or we decrease it by scaling in. By using this approach, we can start with a small VM size and keep the resource consumption as optimal as possible. Also, there is no downtime for our application, as there will always be at least one instance of the app running. Horizontal scaling requires a load balancer in order to distribute the load evenly among the running VMs. Luckily for us, Azure does this out of the box with zero action needed from our side.
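The arithmetic behind horizontal scaling can be sketched in a few lines of Python. The example below estimates how many instances are needed for a given request rate and then spreads requests across them in round-robin order. The function names, the per-instance capacity, and the minimum and maximum limits are assumptions made for illustration. Round robin is used only because it is easy to follow; it is not a claim about how Azure distributes traffic.

```python
import math
from itertools import cycle


def instances_needed(requests_per_sec: float, capacity_per_instance: float,
                     minimum: int = 1, maximum: int = 10) -> int:
    """Instances required to serve the load, clamped to [minimum, maximum]."""
    raw = math.ceil(requests_per_sec / capacity_per_instance)
    return max(minimum, min(maximum, raw))


def round_robin(requests: list, instance_count: int) -> dict:
    """Assign each request to an instance in turn, as a simple load balancer would."""
    assignment = {i: [] for i in range(instance_count)}
    targets = cycle(range(instance_count))
    for request in requests:
        assignment[next(targets)].append(request)
    return assignment
```

With a capacity of 100 requests per second per instance, a load of 450 requests per second needs five instances. When the load drops to zero, the count falls back to the minimum of one, which is why the application stays available.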

Figure 5. Scaled out when traffic increases.

Figure 5.1 – Scaled in when traffic decreases.

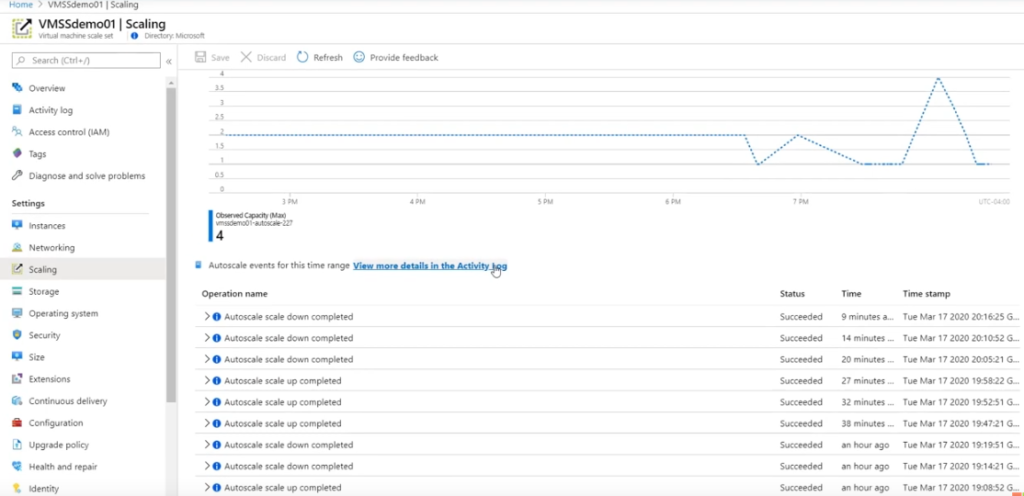

Monitoring and Alerts

There are many ways in which you can monitor whether additional resources have been added to a service on Azure, some of which are quite complicated, for example, the Scaling blade of a service (Virtual Machine Scale Sets in this case, Figure 6). This method is preferred by administrators, but not by owners and contributors. To view it, users need to be logged in to the Azure portal, which can be quite time consuming.

Figure 6

Alerts

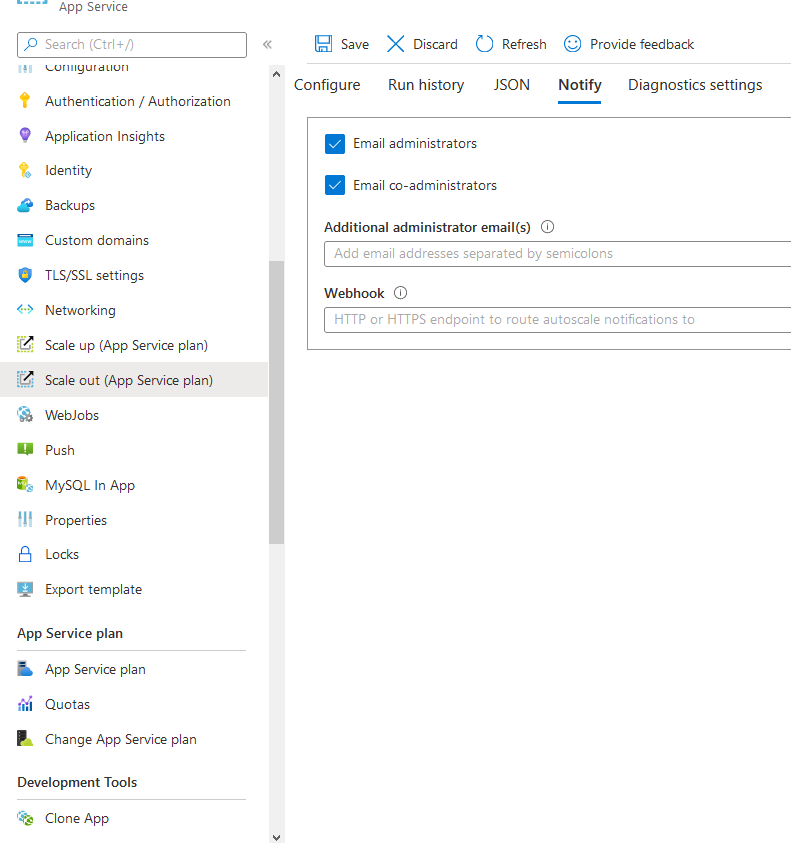

You can choose to notify users when an autoscale scale-out or scale-in happens (App Service).
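As a rough illustration of what such a notification contains, the Python sketch below scans a series of instance counts, detects each scale-out and scale-in, and formats a short alert message. The function names, the resource name `my-app-service`, and the message format are all hypothetical. In Azure, the notification itself is configured on the autoscale setting rather than written by hand.

```python
def scale_events(instance_counts):
    """Return (sample_index, direction, before, after) for every change in instance count."""
    events = []
    for i in range(1, len(instance_counts)):
        before, after = instance_counts[i - 1], instance_counts[i]
        if after != before:
            direction = "scale out" if after > before else "scale in"
            events.append((i, direction, before, after))
    return events


def format_alert(event, resource="my-app-service"):
    """Build a human-readable alert line; the resource name is a placeholder."""
    index, direction, before, after = event
    return f"[{resource}] {direction}: {before} -> {after} instances (sample {index})"
```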

Figure 7

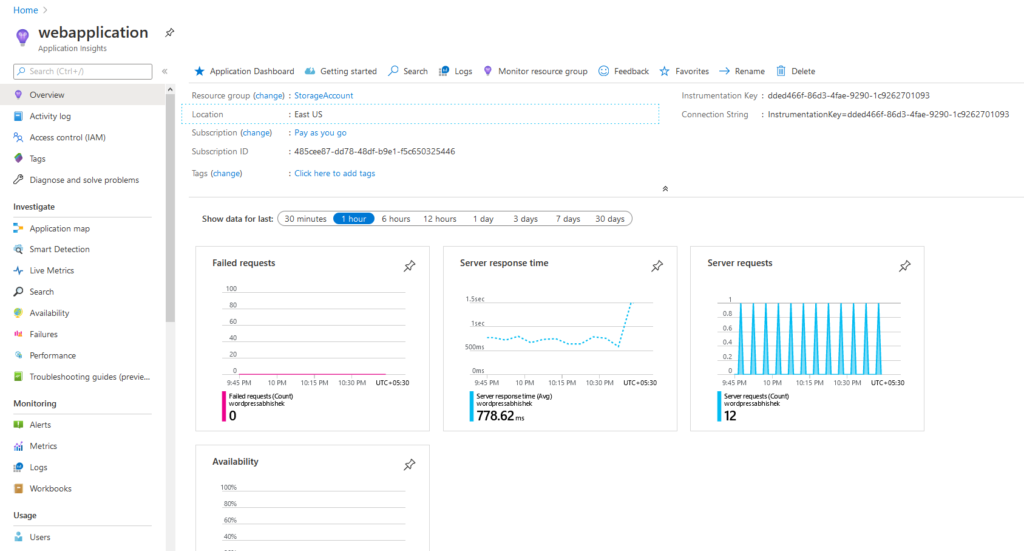

By default, Application Insights is used for the App Service, which gives us insight into the server response time, requests, and so on.

Figure 8. Application Insights showing the average server response time with the requests an app service receives.

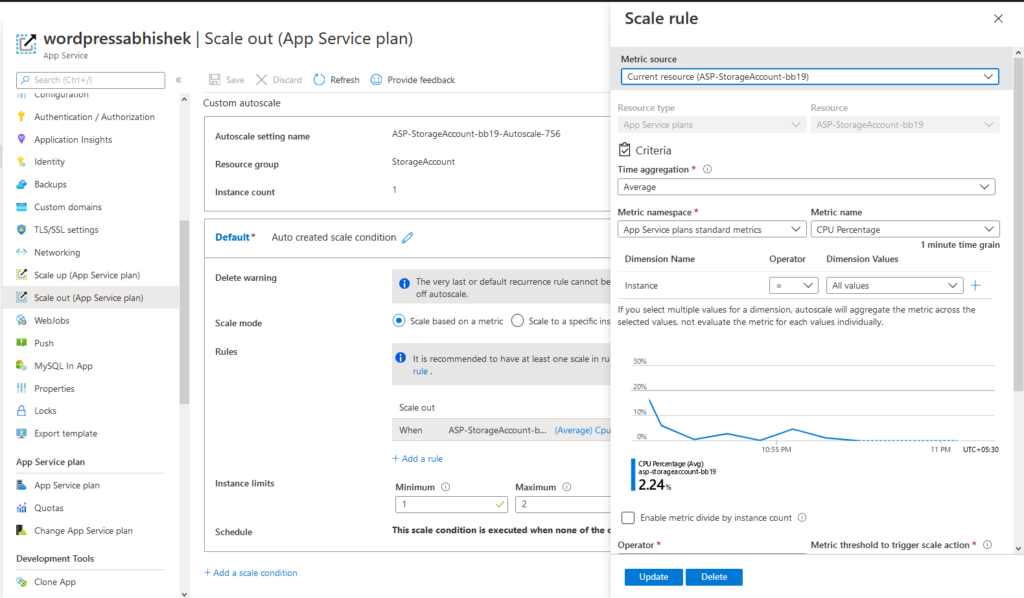

This is how to configure autoscaling in an App Service: go to Scale-out > Configure > Add Scale condition, select an appropriate metric such as CPU, RAM, or requests, and then select Save.

Figure 9. Configuring the auto-scale conditions in an app service plan
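Conceptually, a scale condition pairs a metric with a threshold and an instance change. The following Python sketch shows one way such rules could be evaluated against recent metric samples. The `ScaleRule` fields, the `cpu_percent` metric name, and the thresholds of 70 and 25 are assumptions chosen for illustration. This is not Azure's implementation, which also takes time windows and cooldown periods into account.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ScaleRule:
    metric: str       # hypothetical metric name, e.g. "cpu_percent"
    operator: str     # ">" triggers above the threshold; anything else is treated as "<"
    threshold: float
    change: int       # +1 to scale out by one instance, -1 to scale in by one


def evaluate(rules, samples, current, minimum, maximum):
    """Return the new instance count after applying the first matching rule."""
    for rule in rules:
        values = samples.get(rule.metric)
        if not values:
            continue  # no data for this metric, so the rule cannot fire
        average = mean(values)
        if rule.operator == ">":
            matched = average > rule.threshold
        else:
            matched = average < rule.threshold
        if matched:
            # Never go below the minimum or above the maximum instance count.
            return max(minimum, min(maximum, current + rule.change))
    return current


rules = [
    ScaleRule("cpu_percent", ">", 70, +1),   # scale out under high CPU
    ScaleRule("cpu_percent", "<", 25, -1),   # scale in when mostly idle
]
```

Calling `evaluate(rules, {"cpu_percent": [80, 90, 85]}, 2, 1, 5)` raises the count from two to three instances. An average between the two thresholds leaves the count unchanged, which avoids scaling back and forth on small fluctuations.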

When you use Azure Autoscale, you don’t have to worry about how to implement load balancers, traffic managers, etc. Azure manages everything.

Note: Standalone VMs need additional configuration. However, Virtual Machine Scale Sets require no administrative action when autoscaling. Load balancers are created automatically.

Azure App Service has a hands-off autoscaling method that is handled by Azure: you do not see the additional instances as individual services among your resources, which removes administrative overhead. Once autoscaling is in place, users experience little to no performance issues. Azure handles most of the autoscaling work; apart from specifying the autoscale conditions, there is not much left for you to do.

Figure 10 shows a Virtual Machine Scale Set (VMSS), which scales automatically when the conditions you specify are met.

Figure 10. Virtual Machine Scale Sets with Load Balancer.

Testing Azure Autoscale

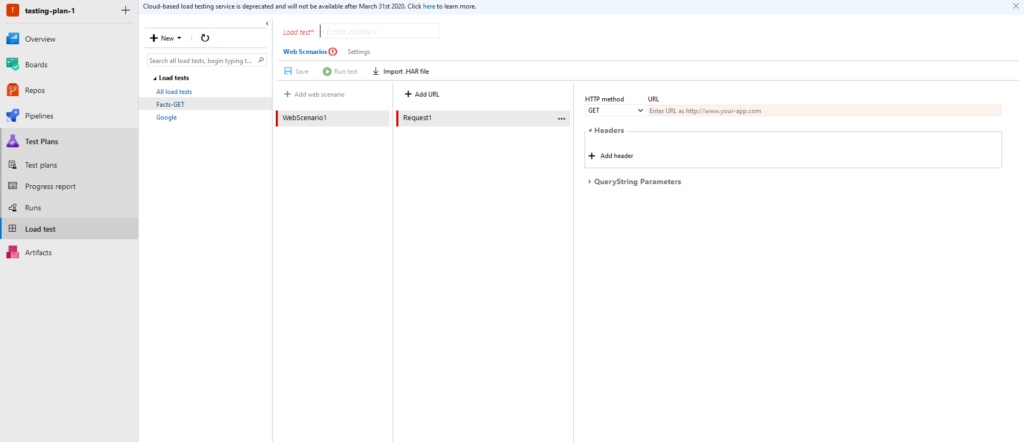

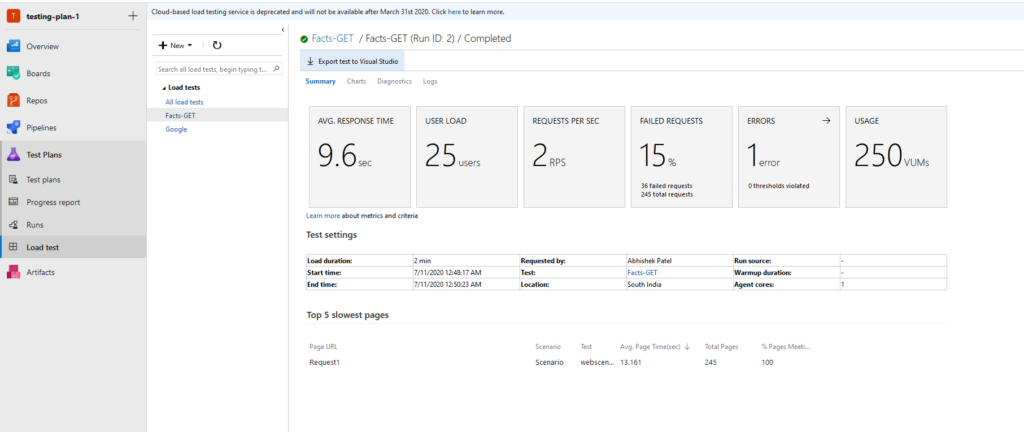

Testing is an integral part of building a web application. Without testing, we can’t know for sure whether the web server can handle the traffic. Stress testing and load testing are two examples. To handle testing entirely on Azure, sign up for a DevOps organization within the Azure portal and create a project. You will then be redirected to the following page:
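To illustrate what a load test measures, here is a minimal Python sketch that calls a target concurrently with a number of virtual users and summarises the observed latencies. It is not how Azure DevOps or any other tool runs its tests. The names `run_load_test` and `fake_endpoint` are hypothetical, and `fake_endpoint` simply sleeps instead of sending a request, so you would replace it with a real HTTP client call.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def timed_call(target):
    """Run target once and return its duration in seconds."""
    start = time.perf_counter()
    target()
    return time.perf_counter() - start


def run_load_test(target, virtual_users: int, requests_per_user: int) -> dict:
    """Call target concurrently and summarise the observed latencies."""
    total = virtual_users * requests_per_user
    # One worker thread per virtual user; requests are shared among the workers.
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        latencies = sorted(pool.map(lambda _: timed_call(target), range(total)))
    p95_index = max(0, int(round(0.95 * total)) - 1)
    return {
        "requests": total,
        "avg_seconds": mean(latencies),
        "p95_seconds": latencies[p95_index],
        "max_seconds": latencies[-1],
    }


def fake_endpoint():
    """Stand-in for an HTTP request; replace with a real client call."""
    time.sleep(0.01)


result = run_load_test(fake_endpoint, virtual_users=4, requests_per_user=5)
```

The summary contains the same kind of figures a load testing report shows: the request count, the average response time, and the slower percentiles, which usually reveal saturation before the average does.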

Figure 11. DevOps Dashboard for Testing

Figure 12. You can add URLs for testing purposes and look at the metrics of the service that is being tested.

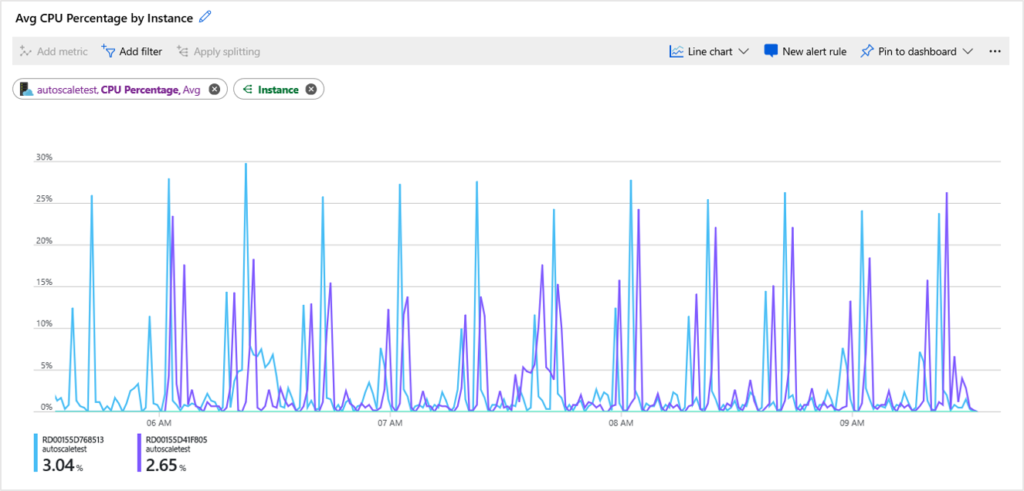

Figure 13. CPU graph of the tested application that is on VM.

Figure 14. Sample results for a GET API with details such as response time, user load, requests per second, etc.

Using LoadView to Verify That Azure Autoscale Works Properly

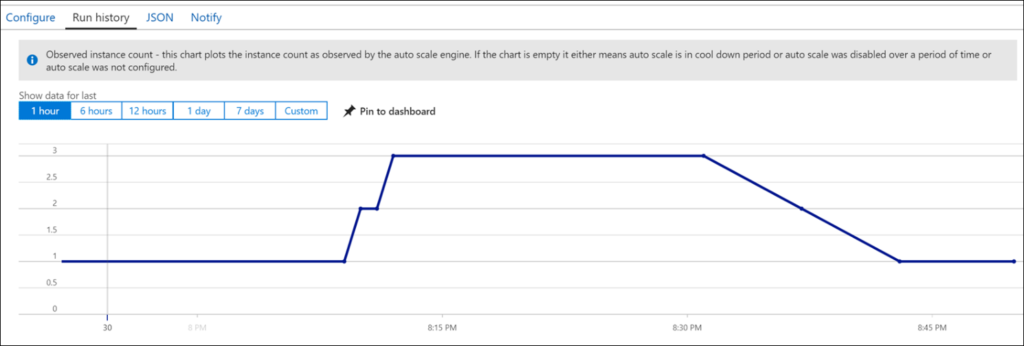

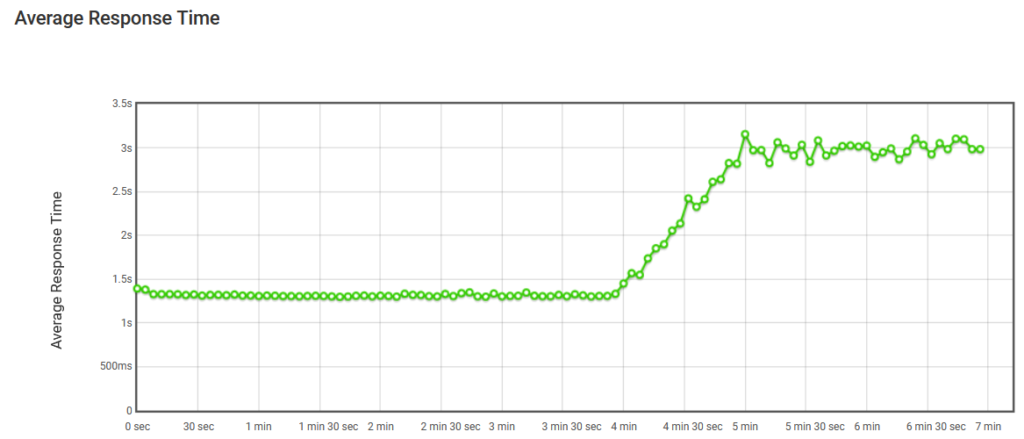

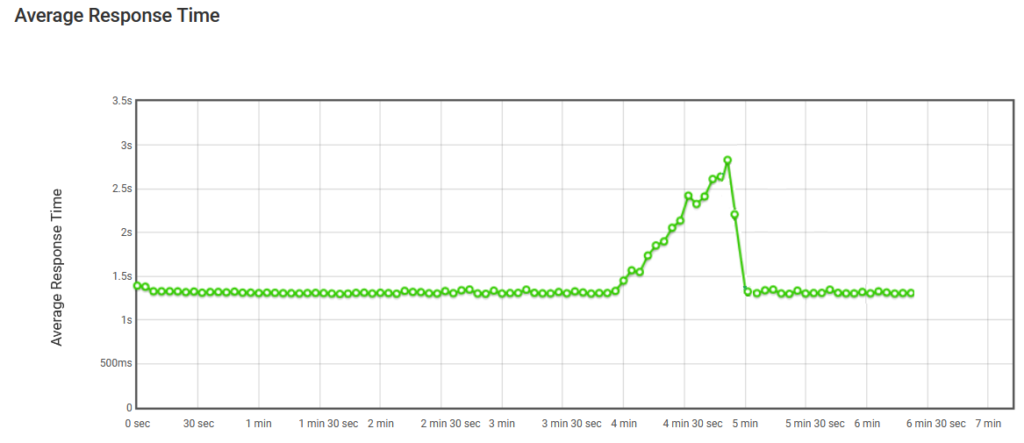

As we know by now, autoscaling occurs when a configured threshold for CPU, RAM, or I/O is reached. The graph in Figure 15 is taken from a report that LoadView provides when you run a test against a certain URL or endpoint. In this first graph, a constant number of users visits the site as per our load test strategy, and after a certain point the average response time increases substantially.

Figure 15. Average Response Time Without Autoscale

However, autoscaling brings advantages. In Figure 16, when the number of users increases, the instances hosting our web application scale out as per the conditions, so the average response time is no longer affected once the autoscaling is completed. When users are no longer connected, the instances that were created to handle the unpredictable load are terminated and only the initial count remains.
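The difference between the two graphs can be reproduced with a toy model. The Python sketch below assumes that response time stays flat while the load is within capacity and grows proportionally once capacity is exceeded. With autoscale enabled, extra instances become available one step later to mimic provisioning delay. The capacity of 100 users per instance, the 200 ms base latency, and the load profile are all made-up numbers, and the model is a simplification, not a measurement.

```python
import math


def response_time(users: int, instances: int, capacity: int = 100,
                  base_ms: float = 200.0) -> float:
    """Toy model: latency is flat until capacity is exceeded, then grows with overload."""
    total_capacity = instances * capacity
    return base_ms * max(1.0, users / total_capacity)


def simulate(load_profile, autoscale: bool, capacity: int = 100, max_instances: int = 5):
    """Return the modelled response time (ms) at each step of the load profile."""
    instances = 1
    timeline = []
    for users in load_profile:
        timeline.append(response_time(users, instances, capacity))
        if autoscale:
            # New instances only help from the next step on (provisioning delay).
            instances = max(1, min(max_instances, math.ceil(users / capacity)))
    return timeline


load = [50, 150, 300, 300, 300, 50]
without_autoscale = simulate(load, autoscale=False)
with_autoscale = simulate(load, autoscale=True)
```

In this model, the run without autoscale stays at three times the base latency for as long as 300 users are connected. The run with autoscale shows a brief rise while instances are being added and then returns to the base latency.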

Figure 16. Average Response Times with Autoscale

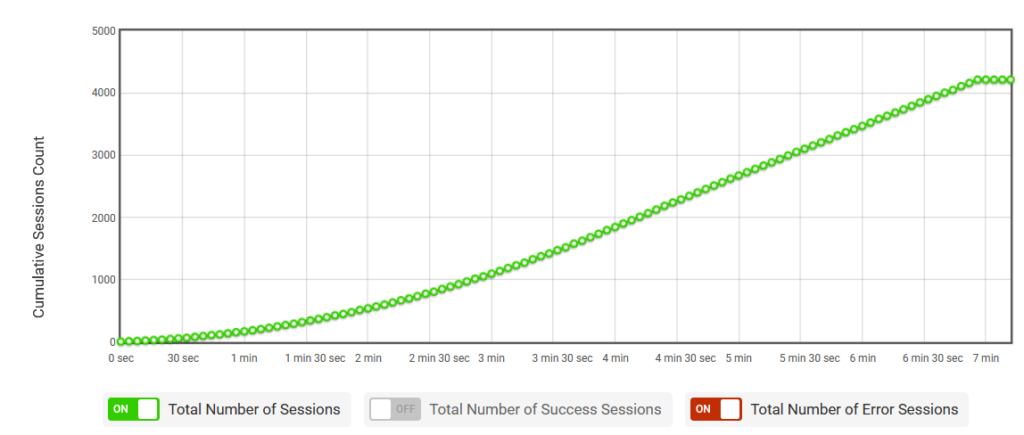

Figure 17 shows the cumulative number of sessions during a LoadView load test. Because the number of sessions keeps increasing throughout the test, you can exercise the app properly and verify whether or not it autoscales.

Figure 17. The number of cumulative sessions

Testing Azure Autoscale: Conclusion

When you implement Microsoft Azure Autoscale, you don’t have to worry about how to implement load balancers, traffic managers, etc. Azure manages everything and makes sure the correct amount of resources is running to handle the load of the applications you’re testing. However, using a solution like LoadView ensures that Autoscale is running correctly and that your users don’t experience any performance degradation as resources are added.

Try LoadView for yourself and receive up to 5 free load tests to start.